Non-human primates are valuable animal models for behavioral and cognitive research, especially for researchers investigating neurodegenerative diseases. Behavioral data is a critical component of these investigations, and new research has sought to develop an automated analysis program to optimize behavioral monitoring.

Image Credit: Blueton/Shutterstock.com

Since behavior and neural activity are linked, behavior observation can be used to determine what types of neural activity changes have occurred. As a result, many studies have used video recording or researchers’ direct observation to measure non-human primate behavior.

In work published in the MDPI journal Applied Sciences, researchers used a single depth imaging camera to build an automated behavioral pattern analysis program for a non-human primate in its home cage.

Depth images provide spatial information along the direction orthogonal to the 2-D image plane, which is critical for evaluating monkey behavior in three dimensions.

Researchers created the algorithm to track the center of the object and its height, both calculated from the depth images, so that the monkey’s activity could be measured automatically.

The program quantifies three categories of behavior: sitting, standing, and pacing. The automated algorithm measured the duration of each behavior.

Researchers tested the program’s reliability by measuring the actions of nine monkeys and comparing the findings to two observers’ manual measures.

A single depth imaging camera was employed in this procedure, and measurements were taken in the individual home cages where the monkeys were already housed.

Methodology

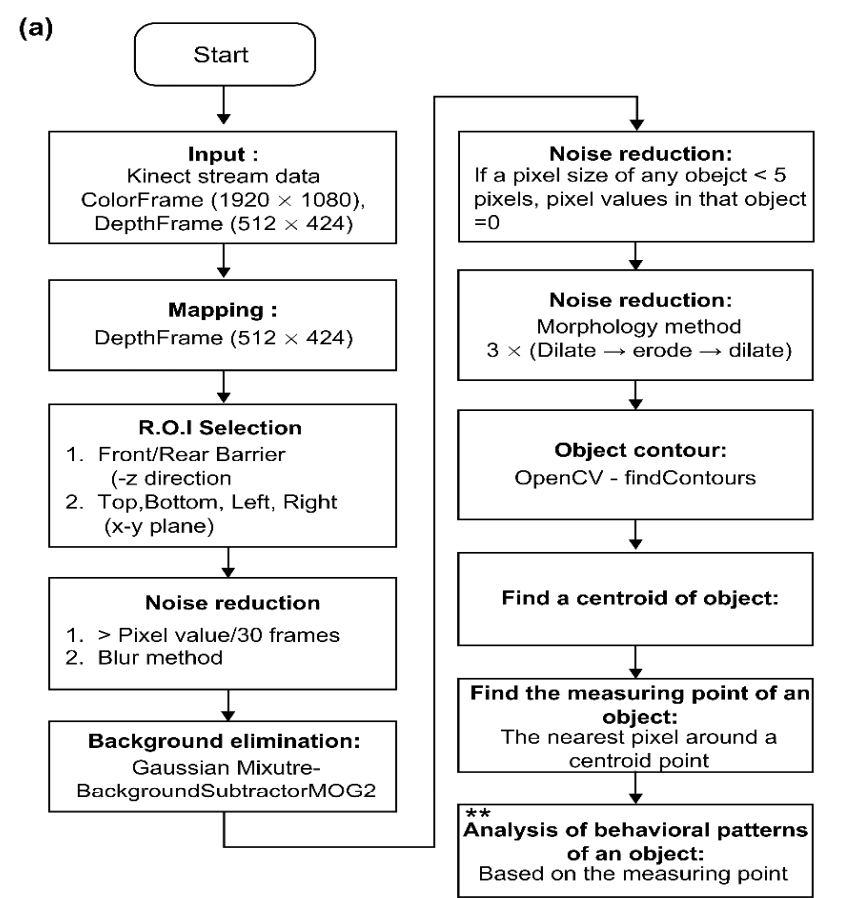

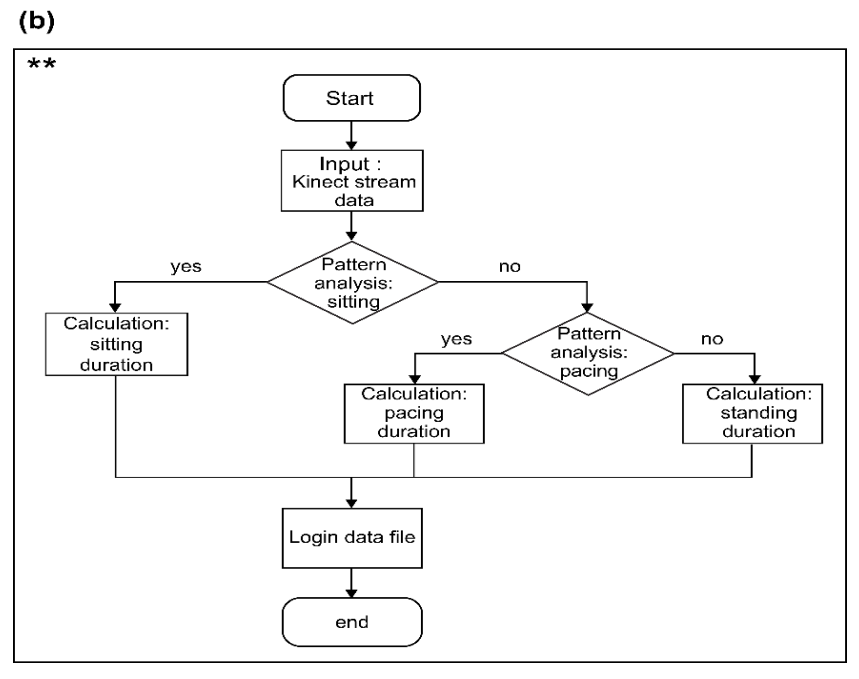

Background removal and object-tracking methods, such as the Gaussian mixture model, centroid, morphological filtering, noise reduction, and classification algorithms, were used in the automated analysis program for classifying behavioral patterns. Figure 1 shows the procedures that made up the automated program.

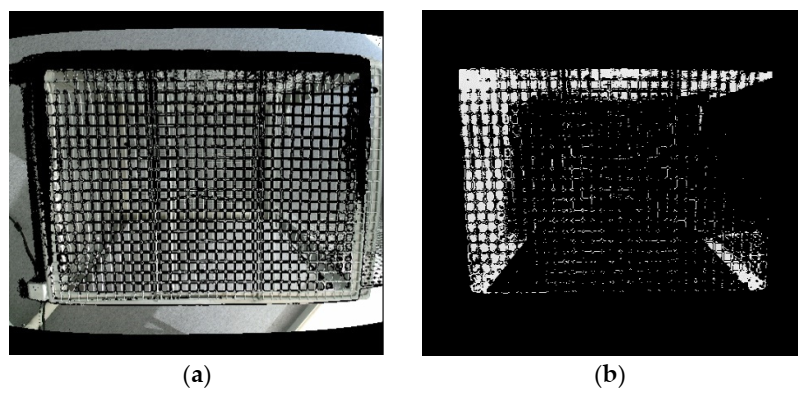

Figure 1. (a) Merged image from ColorFrame + DepthFrame and (b) Image after applying region of interest (ROI). Image Credit: Han, et al., 2022

Researchers employed two types of camera modules to capture object motions: an RGB image sensor for gathering ColorFrame images and a depth sensor for gathering DepthFrame images.

Researchers combined ColorFrame and DepthFrame to map the two images, which have different resolutions, onto each other, as shown in Figure 1a. To select the region of interest (ROI) inside the cage in the z-direction, data beyond the “Rear Barrier” and “Front Barrier” shown in Figure 2 were deleted.

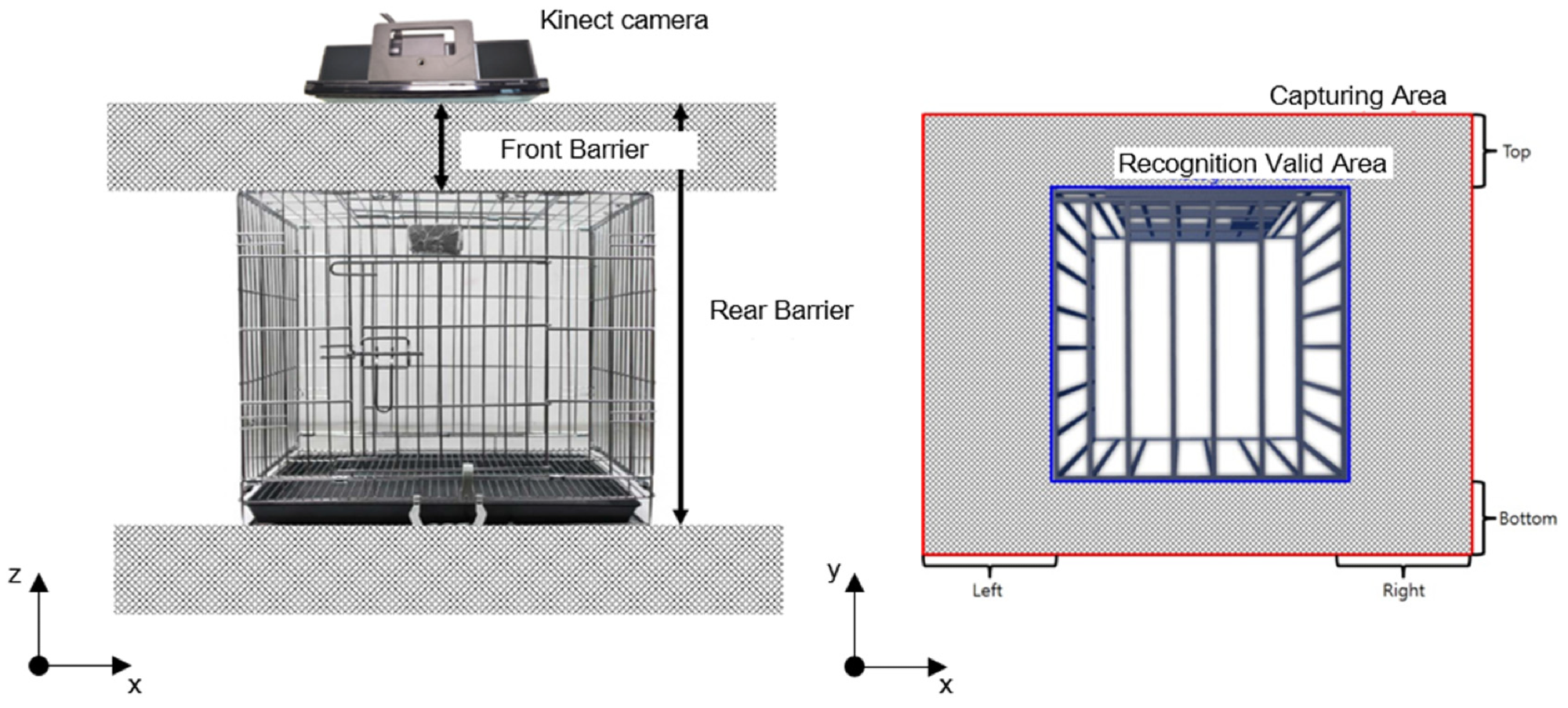

Figure 2. A three-dimensional ROI selection inside a cage. Image Credit: Han, et al., 2022

To select the ROI inside the cage in the x–y plane, image data outside the cage was removed. Figure 2 depicts the removal area as a grey rectangular box with “Top,” “Bottom,” “Left,” and “Right” written on each side.
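The ROI selection described above can be sketched with NumPy as a crop in the image plane plus a depth band. This is a minimal illustration, not the authors' code: the function name `apply_roi`, the parameter names, and the convention that removed pixels are set to 0 are all assumptions.

```python
import numpy as np

def apply_roi(depth, row_range, col_range, depth_range):
    """Zero out every pixel outside a 3-D region of interest.

    depth       : 2-D array of per-pixel distances from the camera
    row_range   : (top, bottom) row bounds, bottom-exclusive
    col_range   : (left, right) column bounds, right-exclusive
    depth_range : (near, far) depth bounds, inclusive (the two barriers)
    """
    top, bottom = row_range
    left, right = col_range
    near, far = depth_range

    roi = np.zeros_like(depth)
    window = depth[top:bottom, left:right]
    # Keep only readings that fall between the two depth barriers.
    in_band = (window >= near) & (window <= far)
    roi[top:bottom, left:right] = np.where(in_band, window, 0)
    return roi

# Hypothetical 4 x 5 depth frame: mostly background at 1000 units.
frame = np.full((4, 5), 1000, dtype=np.uint16)
frame[1, 2] = 500   # inside the crop and inside the depth band
frame[0, 0] = 500   # inside the depth band but outside the crop
cropped = apply_roi(frame, row_range=(1, 3), col_range=(1, 4),
                    depth_range=(400, 800))
```

Only the pixel at row 1, column 2 survives in `cropped`; everything else is outside either the rectangular crop or the depth band.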

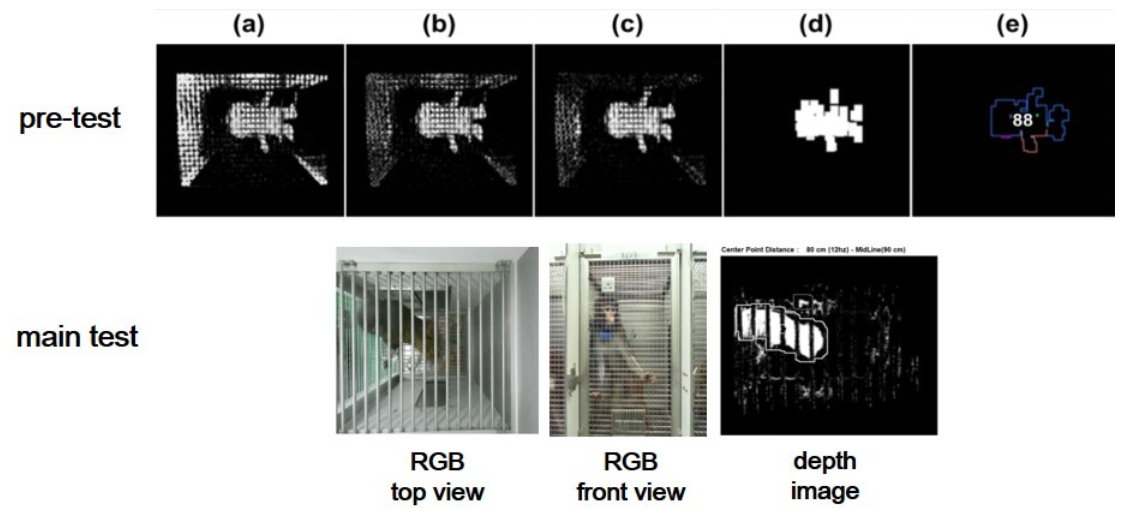

In Figure 3a, after 30 frames of image data had been recorded, pixels whose change was only slight (within the prescribed value) were eliminated. Then, in Figure 3b, the “blur” function was employed to remove slight noise from the image data.

Figure 3. Automated program process for pre-test: (a–d) background reduction and noise elimination, (e) centroid, centroid area, and measuring point identification. RGB and depth images from the main test. Image Credit: Han, et al., 2022

In Figure 3c, the “BackgroundSubtractorMOG2” in OpenCV was used to remove the background, separating the target object from the raw images with a Gaussian mixture model. In Figure 3d, the “dilate” operation set positive pixels to the image’s brightest value, while the “erode” operation pushed negative pixels toward the image’s darkest value.

A rectangular area with sides of 5 to 10 pixels around the centroid is denoted “the centroid area,” and “the smallest depth value” inside that area roughly corresponds to the skull of the experimental monkey, as illustrated in Figure 3e. Figure 4 shows the corresponding logical flow chart.
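One way to picture the centroid area and the measuring point is the NumPy sketch below. It assumes a binary foreground mask and an aligned depth image are already available, uses a 9-pixel window (within the 5 to 10 pixel range mentioned above), and treats a depth of 0 as a missing reading; the function name and those conventions are illustrative assumptions.

```python
import numpy as np

def measuring_point(mask, depth, half_size=4):
    """Return ((cx, cy), smallest_depth) for the foreground object, or None.

    mask      : 2-D array, nonzero where the object was detected
    depth     : 2-D array of depth readings aligned with the mask
    half_size : half the side of the square centroid area, in pixels
    """
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None                      # nothing detected in this frame

    # Centroid of the foreground pixels.
    cx = int(round(float(xs.mean())))
    cy = int(round(float(ys.mean())))

    # Square window around the centroid, clipped to the image bounds.
    top, bottom = max(cy - half_size, 0), min(cy + half_size + 1, depth.shape[0])
    left, right = max(cx - half_size, 0), min(cx + half_size + 1, depth.shape[1])
    window = depth[top:bottom, left:right]

    valid = window[window > 0]           # assumption: 0 means "no reading"
    if valid.size == 0:
        return None
    return (cx, cy), int(valid.min())

# Hypothetical frame: an 11 x 11 object whose closest point reads 700.
mask = np.zeros((40, 60), np.uint8)
mask[10:21, 20:31] = 255
depth = np.full((40, 60), 1000, np.uint16)
depth[14, 26] = 700
point = measuring_point(mask, depth)
```

Here `point` is `((25, 15), 700)`: the centroid of the object and the smallest depth found in the window around it.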

Figure 4. (a) Flow chart representing object contour creation, (b) Flow chart representing the behavioral pattern analysis. (Symbols in (a) and (b) indicate the process for the analysis of behavioral patterns). Image Credit: Han, et al., 2022

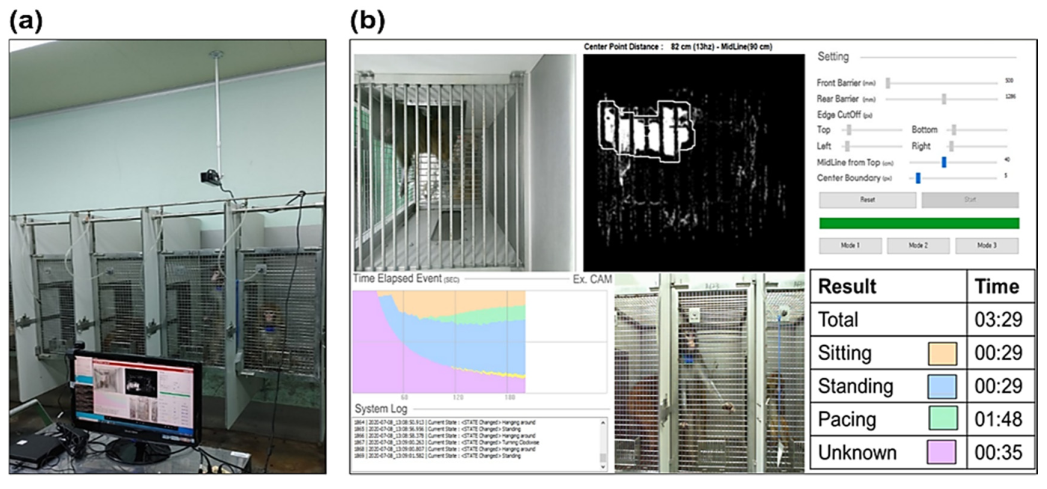

Figure 5 shows behavioral data acquired for 15 minutes using a Kinect camera on monkeys in a stainless-steel-wire-mesh home cage. There were three categories of activity: sitting, standing, and pacing. Table 1 shows how each activity pattern is defined. The duration of each activity was measured. Two professional examiners checked the data for accuracy.

Figure 5. (a) Camera and computer system setting in the experiment: the camera was installed above the home cage and the number and duration of the three behaviors were measured using the automated program. (b) User interface for the automated behavioral pattern analysis program. Image Credit: Han, et al., 2022

Table 1. Ethogram (definition of behavior). Source: Han, et al., 2022

| Behavior | Definition (Program) | Definition (Observer) |
|---|---|---|
| Sitting | When positioned for more than 1 s below the specified height without movement | Sitting without pacing and lasting longer than 1 s |
| Standing | When positioned for more than 1 s above the specified height without movement | Standing without pacing and lasting longer than 1 s |
| Pacing | When the measuring point moves for more than 1 s | Pacing lasting more than 1 s |
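The program-side definitions in Table 1 can be read as a rule over a stream of measuring-point samples. The sketch below is one hedged interpretation: each sample is an `(x, y, height)` tuple, a frame counts as moving when the point shifts by more than `move_threshold` pixels, and only runs lasting longer than 1 s are counted. The frame rate, both thresholds, and the function names are hypothetical.

```python
from itertools import groupby
from math import hypot

def label_frame(prev, cur, height_threshold, move_threshold):
    """Label one frame from the previous and current measuring-point samples."""
    (px, py, _), (cx, cy, height) = prev, cur
    if hypot(cx - px, cy - py) > move_threshold:
        return "pacing"
    return "standing" if height > height_threshold else "sitting"

def behavior_durations(samples, fps, height_threshold, move_threshold,
                       min_seconds=1.0):
    """Sum the time spent in each behavior, ignoring runs of 1 s or less."""
    labels = [label_frame(a, b, height_threshold, move_threshold)
              for a, b in zip(samples, samples[1:])]
    totals = {"sitting": 0.0, "standing": 0.0, "pacing": 0.0}
    for label, run in groupby(labels):
        seconds = len(list(run)) / fps
        if seconds > min_seconds:
            totals[label] += seconds
    return totals

# Hypothetical recording at 10 frames per second.
still_low = [(0, 0, 30)] * 21                            # 2.0 s below threshold
moving = [(5 * i, 0, 30) for i in range(1, 21)]          # 2.0 s of movement
brief_high = [(100, 0, 80)] * 5                          # 0.5 s, too short
totals = behavior_durations(still_low + moving + brief_high, fps=10,
                            height_threshold=50, move_threshold=2)
```

With these inputs, `totals` reports 2.0 s of sitting and 2.0 s of pacing; the half-second above the height threshold is dropped because it does not exceed the 1 s minimum.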

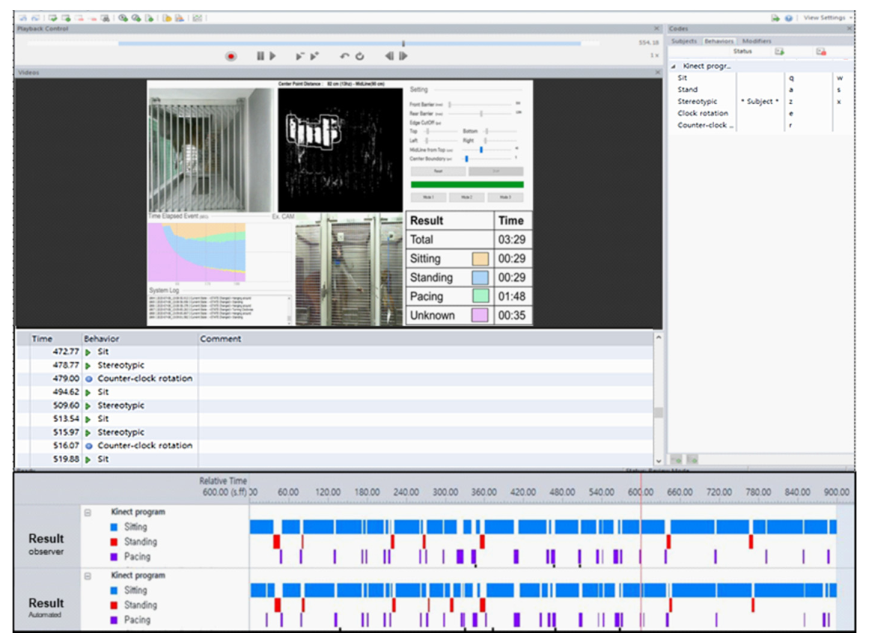

Observer XT is a semi-automated behavioral event coding and data analysis program. While watching a video clip, the user must manually decide on behavioral patterns. It can carry out all of the operations depicted in Figure 6, including target behavior setting, event coding, and outcome analysis.

Figure 6. Program verification through Observer XT. Manual ratings of behaviors using Observer XT software and results including behavioral event and time. Image Credit: Han, et al., 2022

Results and Discussion

Observer #1 and the automated program measured mean total sitting durations of 535.89 and 543.78 seconds, respectively. Table 2 shows that there were no significant differences between the two sets of measurements.

Table 2. Comparison of the measurement analysis between the automated program and observer #1. Source: Han, et al., 2022

| Action | Observer #1 (Mean ± SE) | Program (Mean ± SE) | t | p-Value |
|---|---|---|---|---|
| Sitting | 535.89 ± 99.92 | 543.78 ± 98.19 | −1.268 | 0.240 |
| Standing | 68.00 ± 14.94 | 55.67 ± 15.93 | 1.933 | 0.089 |
| Pacing | 296.00 ± 100.10 | 304.78 ± 100.38 | −1.963 | 0.085 |

Mean ± SE: mean ± standard error. t: paired t-test.

Observer #2 and the automated program measured mean total sitting durations of 529.67 and 543.78 seconds, respectively. Table 3 shows that there was no significant difference between the two sets of measurements.

Table 3. Comparison of the measurement analysis between the automated program and observer #2. Source: Han, et al., 2022

| Action | Observer #2 (Mean ± SE) | Program (Mean ± SE) | t/z | p-Value |
|---|---|---|---|---|
| Sitting | 529.67 ± 103.99 | 543.78 ± 98.19 | −1.324 | 0.222 |
| Standing | 62.00 ± 16.76 | 55.67 ± 15.94 | 1.115 | 0.297 |
| Pacing † | 308.33 ± 108.80 | 304.78 ± 100.38 | 0.340 | 0.743 |

Mean ± SE: mean ± standard error. t: paired t-test. †(z): Wilcoxon signed ranks test.

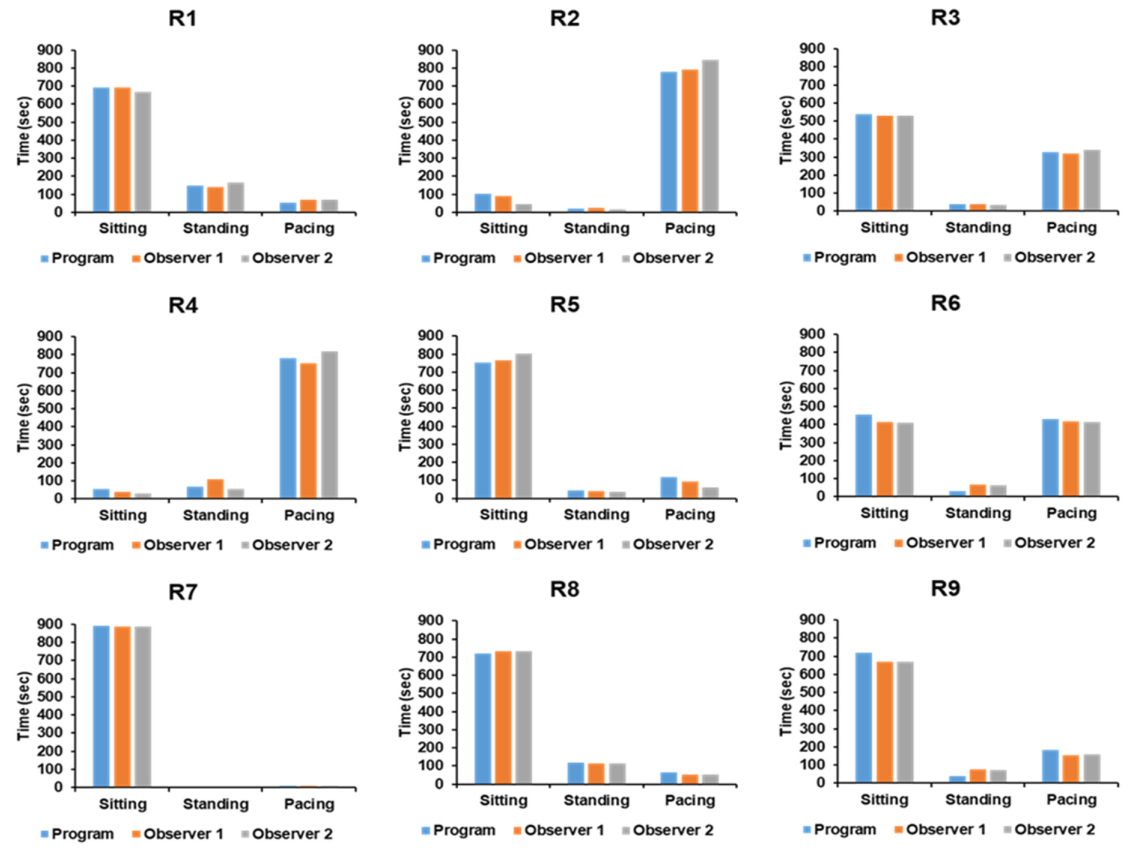

As indicated in Table 4 and Figure 7, the measurements from the two observers and from the automated program were in close agreement, with no statistically significant differences.

Figure 7. Comparison of measurement analysis from the automated program against two observers for each subject. Image Credit: Han, et al., 2022

Table 4. Comparison of raw data between measurement analysis from the automated program and two observers for each subject. Source: Han, et al., 2022

| Behavior | Rater | R1 | R2 | R3 | R4 | R5 | R6 | R7 | R8 | R9 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sitting | Program | 690 | 102 | 536 | 54 | 751 | 456 | 891 | 719 | 717 |
| Sitting | Observer #1 | 691 | 88 | 531 | 38 | 766 | 414 | 887 | 732 | 671 |
| Sitting | Observer #2 | 667 | 46 | 528 | 14 | 801 | 408 | 889 | 734 | 669 |
| Standing | Program | 148 | 19 | 38 | 68 | 42 | 32 | 0 | 117 | 37 |
| Standing | Observer #1 | 138 | 23 | 41 | 108 | 40 | 66 | 6 | 115 | 74 |
| Standing | Observer #2 | 164 | 14 | 34 | 57 | 36 | 62 | 5 | 115 | 72 |
| Pacing | Program | 54 | 780 | 326 | 779 | 116 | 432 | 9 | 64 | 184 |
| Pacing | Observer #1 | 71 | 789 | 321 | 752 | 95 | 419 | 7 | 53 | 155 |
| Pacing | Observer #2 | 69 | 844 | 339 | 826 | 61 | 412 | 8 | 52 | 159 |
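For readers who want to see how a paired t-test of this kind is computed, the sketch below applies the textbook formula to the sitting rows of Table 4 (Observer #1 against the program). It is illustrative only: the Table 4 values as listed here do not reproduce the Table 2 means exactly, so the resulting statistic should not be expected to match the published t value, and the function name `paired_t` is an assumption.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(a, b):
    """Return (t statistic, degrees of freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # t = mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Sitting durations per subject (R1 to R9), copied from Table 4.
program_sitting = [690, 102, 536, 54, 751, 456, 891, 719, 717]
observer1_sitting = [691, 88, 531, 38, 766, 414, 887, 732, 671]

t_stat, dof = paired_t(observer1_sitting, program_sitting)
```

With nine subjects there are eight degrees of freedom. The p-value would then be read from a t distribution with `dof` degrees of freedom, for example with a statistics package.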

This study proposed using a single depth imaging camera to measure primates’ behavioral patterns in an individual home cage. The automated algorithm agreed closely with manual observation when measuring and classifying the selected non-human primate behaviors in their cages.

The automated program correctly classified the selected behaviors and might serve as an effective evaluation tool in disease models with prominent behavioral symptoms, such as Parkinson’s disease and ataxia.

Conclusion

The automated program used in this study could not detect minor movements of body parts such as the hands and head. It classified and measured only the selected large movements: sitting, standing, and pacing.

Furthermore, the program could measure only one object at a time, and it automatically classifies only three primary behaviors.

Within those limits, the researchers found no discernible difference between the measurements from the automated program and those from the observers.

The automated program is expected to provide a useful analytical measurement tool for non-human primate behavior in individual cages in future behavior research.

Journal Reference:

Han, S. K., Kim, K., Rim, Y., Han, M., Lee, Y., Park, S. -H., Choi, W. S., Chun, K. J., Lee, D. -S. (2022) A Novel, Automated, and Real-Time Method for the Analysis of Non-Human Primate Behavioral Patterns Using a Depth Image Sensor. Applied Sciences, 12(1), p. 471. Available Online: https://www.mdpi.com/2076-3417/12/1/471/htm.
