Sponsored by CSEM | Oct 20 2022 | Reviewed by Olivia Frost

Multispectral cameras capture an image of a scene in a large number of spectral channels, which makes them very attractive for accurate color depiction in photography and for skin, plant, or food analysis. The approach presented here focuses on miniaturization and addresses a shortcoming of existing multispectral camera technologies.

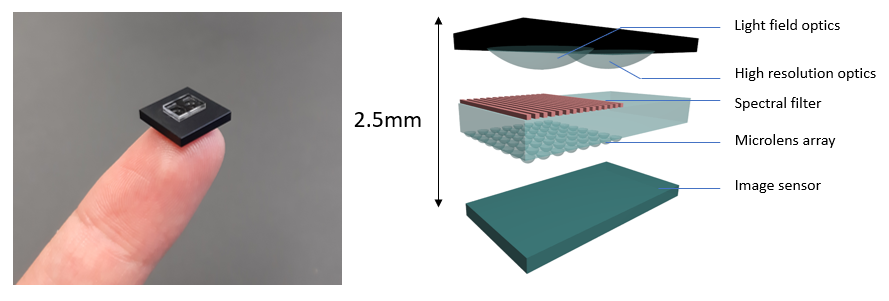

In this article, researchers introduce a design for a compact multispectral snapshot camera less than 3 mm thick, well suited to incorporation in IoT, wearable, or smartphone devices.

The design employs primary imaging optics and an image sensor, with nanostructured filters and a microlens array inserted in the optical path so that they add no thickness to the optics.

Since the multispectral optics are manufactured and incorporated independently of the image sensor, the design is compatible with pixel miniaturization. The selected filtering method is free of mosaicking effects, and the pixel resolution is not constrained by spectral requirements.

Figure 1 depicts a schematic of a multispectral camera with a twin optics architecture [1]. The light field emitted by an object point is projected onto a microlens array. Between the imaging optics and the microlens array sits a filter whose transmission spectrum depends on the incidence angle.

After passing through the filter, the angular dispersion of the light is imaged under each microlens. A neural network reconstructs a multispectral image from the signal recorded on the part of the image sensor beneath the microlens array. The other half of the image sensor captures a high-resolution RGB signal, which is then combined with the light-field multispectral signal.
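The link between pixel position under a microlens and wavelength can be illustrated with a minimal Python sketch. It is a simplification under stated assumptions, not the design's actual optical model: a ray is taken to cross the microlens centre undeviated, so its lateral offset on the sensor gives its angle, and the filter is modeled by first-order grating phase matching, λ = Λ(n_eff − sin θ), with a wavelength-independent effective index. The function names and all numerical values (pixel pitch, lens-to-sensor distance, grating period, effective index) are hypothetical and do not come from the source.

```python
import math


def incidence_angle_deg(pixel_offset, pixel_pitch_um, lens_to_sensor_um):
    """Angle of a ray that crosses the microlens centre and lands
    `pixel_offset` pixels away from the microlens axis (simple geometry)."""
    lateral_um = pixel_offset * pixel_pitch_um
    return math.degrees(math.atan2(lateral_um, lens_to_sensor_um))


def resonance_wavelength_nm(angle_deg, period_nm, n_eff):
    """ASSUMED filter model: first-order phase matching of a resonant
    grating, lambda = period * (n_eff - sin(theta)), with n_eff constant."""
    return period_nm * (n_eff - math.sin(math.radians(angle_deg)))


# Hypothetical values chosen only for illustration.
PIXEL_PITCH_UM = 1.0
LENS_TO_SENSOR_UM = 20.0
PERIOD_NM = 400.0
N_EFF = 1.6

for offset in range(6):
    angle = incidence_angle_deg(offset, PIXEL_PITCH_UM, LENS_TO_SENSOR_UM)
    wavelength = resonance_wavelength_nm(angle, PERIOD_NM, N_EFF)
    print(f"pixel offset {offset}: angle {angle:5.2f} deg, "
          f"transmitted wavelength {wavelength:6.1f} nm")
```

In this toy model, pixels farther from the microlens axis see light that crossed the filter at a larger angle and therefore at a shorter transmitted wavelength, which is the kind of angle-to-spectrum encoding the neural network has to invert.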

Figure 1. Multispectral imaging optics based on imaging optics, nanostructured filters with angle-dependent transmission, microlens array and an RGB image sensor. Image Credit: CSEM (Swiss Center for Electronics and Microtechnology)

The nanostructured filter is based on a hybrid dielectric-metallic periodic nanostructure [2]. The microlens array is originated by photolithography and reflow, then replicated by UV imprint lithography onto a substrate with absorbing microapertures.

The nanostructured filter and microlens array are positioned on the optical path at a specific distance from the image sensor to maximize spectral resolution.

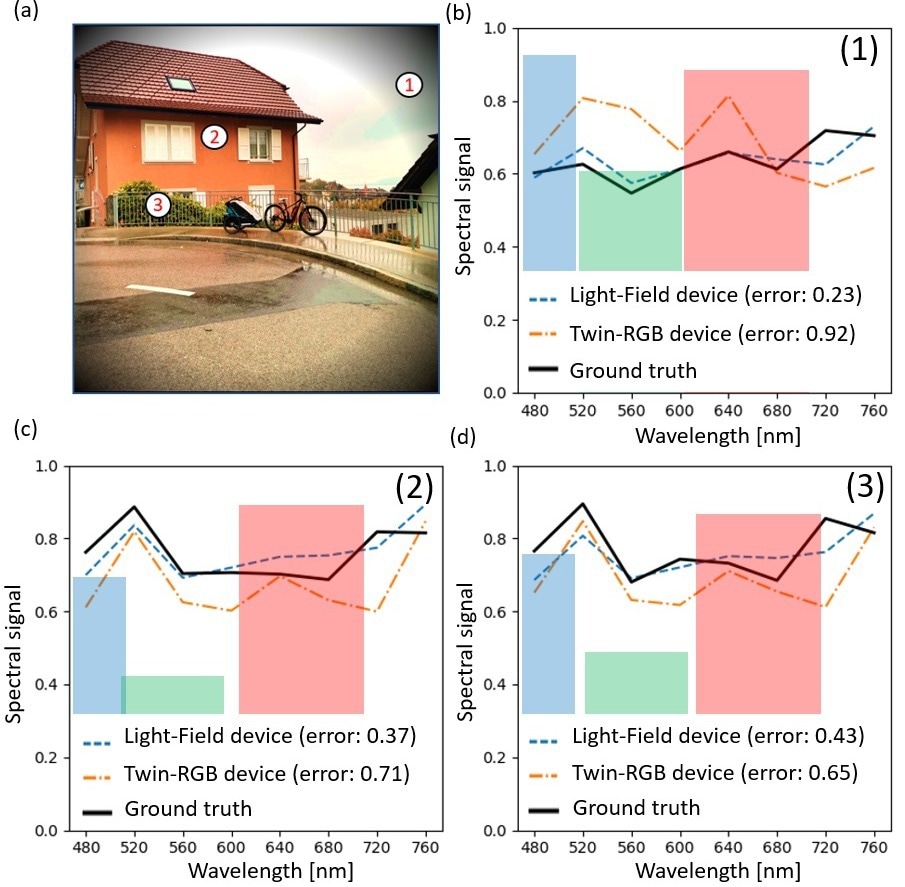

A ground truth signal for neural network training is obtained using an 8-channel filter wheel positioned in front of the high-resolution optics, with each filter having a 20 nm bandwidth. A reference device (called here the twin-RGB device for simplicity) has high-resolution (i.e., non-light-field) optics on both sides of the RGB sensor. A U-Net-like convolutional neural network is used, in which down- and up-sampling layers enlarge the region of support.
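To see why down-sampling enlarges the region of support, the standard receptive-field recurrence can be evaluated for two small layer stacks. This is a sketch only: the layer lists below are hypothetical and are not the architecture used by the researchers, which the article does not specify.

```python
def receptive_field(layers):
    """Receptive field (in input pixels, along one axis) of a stack of
    layers given as (kernel_size, stride) pairs.

    Standard recurrence: each layer adds (kernel - 1) * jump pixels,
    where jump is the product of the strides of all earlier layers.
    """
    field, jump = 1, 1
    for kernel, stride in layers:
        field += (kernel - 1) * jump
        jump *= stride
    return field


# Hypothetical stacks, both containing four 3x3 convolutions.
plain_stack = [(3, 1)] * 4
encoder_stack = [
    (3, 1), (2, 2),  # conv, then 2x2 down-sampling
    (3, 1), (2, 2),
    (3, 1), (2, 2),
    (3, 1),
]

print(receptive_field(plain_stack))    # 9
print(receptive_field(encoder_stack))  # 38
```

With the same number of convolutions, the stack with down-sampling sees a 38-pixel-wide neighborhood instead of 9, so each output value can draw on a much larger area of the sensor. The up-sampling path of a U-Net-like network then restores the spatial resolution.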

The model currently runs at 2p fps with a spectral reconstruction resolution of 712 × 712. A neural network with the same architecture is trained for spectral reconstruction on the reference twin-RGB device. The goal is to separate the contribution of the software from that of the hardware.

Figure 2 compares the spectral reconstruction performance of the two devices. For each method, the deviation between the reconstructed and ground-truth spectral composition of each signal is summed over the full spectrum.
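A deviation summed over the spectrum can be computed as in the short sketch below. The article does not state which deviation measure was used, so the absolute per-channel difference is an assumption here, and the eight-channel spectra are made-up placeholder values rather than measured data.

```python
def summed_deviation(reconstructed, ground_truth):
    """Sum of absolute per-channel differences between two spectra.

    ASSUMPTION: absolute difference is used as the deviation measure;
    the source does not specify the exact metric.
    """
    if len(reconstructed) != len(ground_truth):
        raise ValueError("spectra must have the same number of channels")
    return sum(abs(r - g) for r, g in zip(reconstructed, ground_truth))


# Placeholder 8-channel spectra (illustrative values, not measurements).
ground_truth = [0.10, 0.20, 0.40, 0.60, 0.50, 0.30, 0.20, 0.10]
candidate_a = [0.12, 0.18, 0.41, 0.57, 0.52, 0.31, 0.19, 0.10]
candidate_b = [0.20, 0.25, 0.30, 0.45, 0.55, 0.40, 0.25, 0.15]

print(summed_deviation(candidate_a, ground_truth))
print(summed_deviation(candidate_b, ground_truth))
```

A smaller sum means the reconstructed spectrum follows the ground truth more closely across all channels, which is how the bracketed values in Figure 2 are meant to be read.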

Overall, the light-field architecture greatly improves spectral reconstruction compared with the twin-RGB device, particularly on colorful objects. RGB histograms derived from standard image acquisition with an RGB sensor show substantially less information.

Figure 2. Spectral analysis of a street scene. Three areas (1-2-3) in the image are selected. a) Reference RGB image; b-c-d) The spectral signals reconstructed with the light-field multispectral device and with the twin-RGB device, compared with the ground truth. A neural network is trained for spectral reconstruction on both the light-field and twin-RGB reference devices. In brackets: deviation from ground truth summed over the entire spectrum. Red-green-blue histograms report raw RGB values of a single camera. Image Credit: CSEM (Swiss Center for Electronics and Microtechnology)

Conclusion

In conclusion, researchers have described an ultra-compact multispectral camera for smartphones and demonstrated that it provides a more precise spectral reconstruction of colorful objects. Further steps include expanding its spectral range and training neural networks for applications other than color analysis.

References

1. Patent application WO2022018484A1

2. B. Gallinet, G. Quaranta, C. Schneider, (2021) "Narrowband transmission filters based on resonant waveguide gratings and conformal dielectric-plasmonic coatings", Advanced Optical Technologies, 10, pp. 31-38. Patent EP3617757B1.

This information has been sourced, reviewed and adapted from materials provided by CSEM.

For more information on this source, please visit CSEM's expertise on Photonics, Functional Surfaces, and IoT and Vision.