AI vision, that is, image processing with artificial intelligence, is a much-discussed topic. However, this sophisticated new technology has not yet found its way into some fields, such as industrial applications, so long-term empirical experience is limited.

Image Credit: IDS Imaging Development Systems GmbH

Although there are a number of embedded vision systems on the market that permit the use of AI in industrial settings, many facility operators are still hesitant to invest in one of these platforms and update their applications.

However, AI has already demonstrated innovative possibilities where rule-based image processing has reached its limits and offers no solution. The question therefore remains as to what is hindering the widespread uptake of this new technology.

One of the primary factors impeding adoption is the hurdle of user-friendliness. Anyone should be able to create their own AI-based image processing applications, even if they lack expert knowledge in artificial intelligence and application programming.

Artificial intelligence could speed up a variety of work processes and reduce sources of error, while edge computing eliminates the need for costly industrial computers and the complex infrastructure required for high-speed image data transmission.

New and Different

However, AI, or machine learning (ML), works quite differently from traditional, rule-based image processing. This changes how image processing tasks are approached and handled.

The quality of the results is no longer determined by program code written manually by an image processing specialist, as was previously the case, but by the learning process of the neural networks, which must be trained with sufficient image data.

To put it differently, the object features that must be inspected are no longer predefined by instructions but must be taught to the AI through the training process. Furthermore, the more diversified the training data, the more likely the AI/ML algorithms are to identify the qualities that are especially relevant later in operation.
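The contrast between predefined rules and taught features can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the feature values, class names, and threshold are hypothetical, and a simple nearest-centroid classifier stands in for a neural network so that the example stays self-contained.

```python
from statistics import mean

# Hypothetical feature vectors: (length_mm, head_diameter_mm) per part.
TRAINING_DATA = {
    "short_screw": [(10.2, 5.1), (9.8, 4.9), (10.5, 5.0)],
    "long_screw": [(30.1, 5.2), (29.5, 5.0), (31.0, 4.8)],
}


def classify_by_rule(length_mm: float) -> str:
    """Rule-based: a specialist hard-codes the decisive feature and threshold."""
    return "long_screw" if length_mm > 20.0 else "short_screw"


def train_centroids(data):
    """Learned: derive one mean feature vector per class from labeled examples."""
    return {
        label: tuple(mean(axis) for axis in zip(*samples))
        for label, samples in data.items()
    }


def classify_by_centroid(centroids, features):
    """Assign the class whose learned centroid is closest (squared distance)."""
    def squared_distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


centroids = train_centroids(TRAINING_DATA)
print(classify_by_rule(28.0))
print(classify_by_centroid(centroids, (28.0, 5.0)))
```

In the rule-based function the decision logic lives in the code. In the learned variant it lives in the training data, which is why the variety and quality of that data matter so much.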

What appears simple, however, leads to the desired goal only with adequate knowledge and experience.

Errors will arise if the user does not have a trained eye for the relevant image data. This means that the skills needed to work with machine learning approaches differ from those needed for rule-based image processing.

Moreover, not everybody has the time or resources to dive into the subject from the bottom up and develop a new set of fundamental skills for dealing with machine learning methods.

The biggest issue with new technology is transparency. If a system delivers outstanding results with little effort but cannot be easily verified, for example by reviewing code, it becomes difficult to trust.

Complex and Misunderstood

Logically, one would want to know how this AI vision works. However, without clear, comprehensible explanations, assessing its results is difficult.

Confidence in a new technology is typically built on skills and experience that must be gathered over time. Only then does it become clear what the technology can accomplish, how it works, how to apply it, and how to manage it appropriately.

Making matters even more challenging, AI vision is competing with an established system for which the necessary environment has been built up in recent years: knowledge, training, documentation, software, hardware, and development environments.

The lack of clear insight into the inner workings of the algorithms, together with results that are hard to comprehend, is the other side of the coin and impedes their spread.

(Not) A Black Box

Neural networks are sometimes misinterpreted as a black box whose judgments are unintelligible.

"Although DL models are undoubtedly complex, they are not black boxes. In fact, it would be more accurate to call them glass boxes, because we can literally look inside and see what each component is doing."

Source: "The black box metaphor in machine learning"

Neural network inference decisions are not based on a conventional set of rules, and the dynamic interactions of their artificial neurons may at first be challenging for humans to comprehend. They are nevertheless the clear result of a mathematical system and are thus reproducible and analyzable.
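The point about reproducibility can be illustrated with a deliberately tiny network. The following is a minimal sketch, not a real vision model: the weights, biases, and layer sizes are hypothetical values chosen only to show that a forward pass is plain arithmetic whose intermediate values can be inspected.

```python
import math

# Hypothetical fixed weights of a tiny 2-input, 2-hidden, 1-output network.
HIDDEN_WEIGHTS = [[0.5, -0.3], [0.8, 0.2]]
HIDDEN_BIASES = [0.1, -0.1]
OUTPUT_WEIGHTS = [1.0, -1.5]
OUTPUT_BIAS = 0.05


def sigmoid(x: float) -> float:
    """Standard logistic activation."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs):
    """Return (hidden activations, output) so every step can be inspected."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(HIDDEN_WEIGHTS, HIDDEN_BIASES)
    ]
    output = sigmoid(sum(w * h for w, h in zip(OUTPUT_WEIGHTS, hidden)) + OUTPUT_BIAS)
    return hidden, output


first = forward([0.2, 0.7])
second = forward([0.2, 0.7])
print(first == second)  # same input and same weights give the identical result
```

Real networks have millions of such weights, which is what makes them hard to read by eye, but the principle is the same: nothing in the computation is hidden.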

What is presently lacking are the tools needed for proper evaluation. This field of AI still has a lot of room for improvement. It is also where it becomes apparent how well the different AI systems on the market can support users in their goals.

Software Makes AI Explainable

IDS Imaging Development Systems GmbH is focused on developing this field. One such outcome is the IDS NXT inference camera system. Statistical analyses using the so-called confusion matrix make it possible to determine and interpret the overall quality of a trained neural network.

Following the training procedure, the network can be validated using a previously determined set of images with known results. In a table, the expected outcomes and the real outcomes obtained via inference are compared.

This shows how often the test objects were correctly or incorrectly recognized for each trained object class. From the resulting hit rates, an overall quality measure for the trained algorithm can then be derived.

Moreover, the matrix clearly illustrates where the recognition accuracy may still be inadequate for productive application. However, it does not provide a detailed explanation for this outcome.
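The comparison of expected and inferred results can be sketched as follows. This is an illustrative example only: the class names and validation results are made up, and the code is not taken from the IDS NXT software.

```python
from collections import Counter

# Hypothetical validation results: (expected class, class predicted by inference).
RESULTS = [
    ("screw_a", "screw_a"), ("screw_a", "screw_a"), ("screw_a", "screw_b"),
    ("screw_b", "screw_b"), ("screw_b", "screw_b"), ("screw_b", "screw_b"),
    ("screw_c", "screw_a"), ("screw_c", "screw_c"),
]


def confusion_matrix(results):
    """Count how often each expected class was predicted as each class."""
    return Counter(results)


def hit_rates(results):
    """Per-class hit rate: correct predictions / all samples of that class."""
    matrix = confusion_matrix(results)
    totals = Counter(expected for expected, _ in results)
    return {label: matrix[(label, label)] / total for label, total in totals.items()}


matrix = confusion_matrix(RESULTS)
rates = hit_rates(RESULTS)
labels = sorted(rates)
for expected in labels:
    # One table row per expected class, one column per predicted class.
    row = [matrix[(expected, predicted)] for predicted in labels]
    print(expected, row, f"hit rate {rates[expected]:.2f}")
```

In this made-up data, the row for `screw_c` shows that half of its samples were mistaken for `screw_a`, which points to the class that would benefit most from additional training images.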

Figure 1. This confusion matrix of a CNN classifying screws shows where the identification quality can be improved by retraining with more images. Image Credit: IDS Imaging Development Systems GmbH

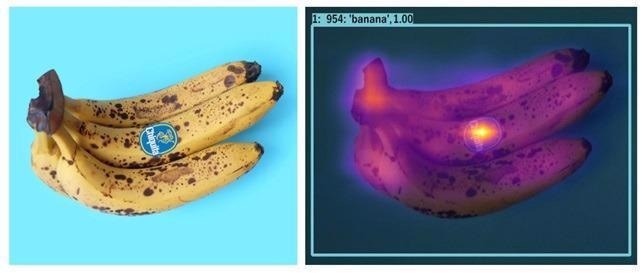

In this situation, the attention map is helpful: it displays a kind of heat map that highlights the regions or image contents the neural network focuses on and uses to guide its decisions.

In the AI Vision Studio IDS NXT lighthouse, this form of visualization is activated during the training process and is based on the decision paths established during training; this enables the network to generate a heat map for each image during analysis.

This makes critical or otherwise unexplained decisions of the AI easier to understand, which promotes greater acceptance of neural networks in industrial settings.

It can also be used to identify and eliminate data biases, which would cause a neural network to make incorrect decisions during inference (see Figure 2).
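To give a feel for how such a heat map can be produced, the sketch below uses occlusion sensitivity, a generic technique that blanks out one image patch at a time and records how much the model's score drops. This is an assumption for illustration and not necessarily how IDS NXT lighthouse computes its attention maps; `toy_score` is a hypothetical stand-in for a trained network.

```python
def toy_score(image):
    """Hypothetical stand-in for a trained network: mean of the top-left 2x2 pixels."""
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


def occlusion_heat_map(image, score_fn, patch=2):
    """Heat per patch = drop in score when that patch is blanked out."""
    baseline = score_fn(image)
    rows, cols = len(image), len(image[0])
    heat = [[0.0] * cols for _ in range(rows)]
    for top in range(0, rows, patch):
        for left in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the input stays intact
            cells = [(r, c)
                     for r in range(top, min(top + patch, rows))
                     for c in range(left, min(left + patch, cols))]
            for r, c in cells:
                occluded[r][c] = 0.0
            drop = baseline - score_fn(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat


IMAGE = [
    [1.0, 1.0, 0.2, 0.2],
    [1.0, 1.0, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
]
for row in occlusion_heat_map(IMAGE, toy_score):
    print(row)
```

Only the top-left patch receives a non-zero value here, because it is the only region the toy model looks at. With a real network, the same idea reveals which image regions actually drive a decision.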

Poor input results in poor output. An AI system’s capacity to recognize patterns and estimate outcomes is dependent on getting relevant data from which it may learn the “correct behavior.”

If an AI is designed in a laboratory using data that is not typical of future applications, or worse, if the patterns in the data imply biases, the system will acquire and apply those preconceptions.

Figure 2. This heat map shows a classic data bias: attention is concentrated on the Chiquita label of the banana. From false or unrepresentative training images of bananas, the CNN has evidently learned that the Chiquita label always indicates a banana. Image Credit: IDS Imaging Development Systems GmbH
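A bias like the one in Figure 2 can also be checked numerically. The sketch below is hypothetical: the heat map values, the label bounding box, and the 0.8 warning threshold are all assumptions chosen for illustration. It simply measures what share of the total attention falls inside a region that should not be decisive, such as a brand label.

```python
def attention_share(heat_map, box):
    """Fraction of total heat inside box = (top, left, bottom, right), end-exclusive."""
    top, left, bottom, right = box
    total = sum(sum(row) for row in heat_map)
    if total == 0:
        return 0.0
    inside = sum(heat_map[r][c] for r in range(top, bottom) for c in range(left, right))
    return inside / total


# Hypothetical heat map with attention clustered in the center.
HEAT_MAP = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.9, 0.8, 0.0],
    [0.0, 0.7, 0.6, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
LABEL_BOX = (1, 1, 3, 3)  # assumed position of the label in the image

share = attention_share(HEAT_MAP, LABEL_BOX)
print(f"{share:.2f}")
if share > 0.8:  # arbitrary threshold, chosen only for this example
    print("attention is concentrated on the label: possible data bias")
```

A high share does not prove a bias on its own, but it flags images whose training data deserves a closer look.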

Software tools allow users to trace the behavior and results of the AI vision, relate them directly to weaknesses in the training data set, and correct those weaknesses in a targeted manner. This makes AI more comprehensible and explainable for everyone, as it is essentially just statistics and mathematics.

Understanding the mathematics and following it through the algorithm is not always simple, but tools like the confusion matrix and heat maps make decisions, and the reasons for them, visible and thus comprehensible.

It is Only the Beginning

AI vision has the potential to improve a variety of visual processes when employed effectively. However, hardware and software alone will not be enough to get the industry to embrace AI. Manufacturers are called upon to share their knowledge in the form of user-friendly software and built-in procedures that guide users.

AI still has a long way to go to catch up to best practices in the field of rule-based imaging applications, which are the outcome of years of effort to establish a loyal customer base through extensive documentation, transfer of knowledge, and numerous software tools. The good news is that such AI systems and supporting tools are already in development.

Standards and certifications are also currently being established to promote acceptance and to make AI understandable enough to be brought to a wider audience. IDS is assisting with this.

With IDS NXT, any user group can quickly and efficiently apply an integrated AI system as an industrial tool — even without an extensive understanding of image processing, machine learning, or application programming.

This information has been sourced, reviewed and adapted from materials provided by IDS Imaging Development Systems GmbH.

For more information on this source, please visit IDS Imaging Development Systems GmbH.