It is hard to deny that AI already outperforms humans in many respects: it is robust and fast, makes almost no errors, and needs no rest breaks. This advantage matters most where work processes must run reliably, at consistent quality, and with high throughput.

Image Credit: IDS Imaging Development Systems GmbH

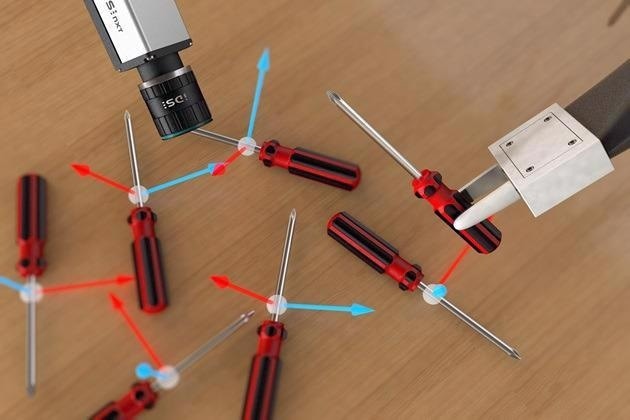

Making processes more effective and economical is one reason AI is used in machine vision. The “Vision Guided Robot” use case shows how a robot and an embedded AI vision camera can intelligently automate typical pick-and-place tasks without a PC.

“Smart gripping” requires different disciplines to work together. If robots are to sort products by shape, material, size, or quality, for instance, each product must first be identified, analyzed, and localized before it can be grasped.

With rule-based image processing systems, this is not only very time-consuming but also uneconomical, especially for small batch sizes.

Combined with AI-based inference, robots can already be equipped with the product knowledge and skills of an experienced worker. For many specific subtasks, major technological advances are no longer required; what is needed is for suitable products from different disciplines to work together effectively as a “smart robot vision system.”

EyeBot Use Case

In a production line, items are scattered randomly along a conveyor belt. They must first be detected, then picked and possibly packaged, or passed on to a suitable station for further processing or inspection. To streamline this, urobots GmbH developed a PC-based solution for object detection and robot control.

The solution's trained AI model identified the position and orientation of the objects in the camera images and used this information to determine the grip coordinates for the robot.
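To make the step from detection to grip coordinates more concrete, here is a minimal Python sketch. It assumes the camera looks straight down at a flat conveyor and that a hand-eye calibration has produced a 2×3 affine matrix mapping pixel coordinates to millimetres in the robot base frame. The `Detection` and `GripPose` classes and the `pixel_to_robot` function are hypothetical illustrations, not part of urobots' software or any IDS NXT API.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output: object centre in pixels plus orientation."""
    label: str
    u: float          # pixel column of the object centre
    v: float          # pixel row of the object centre
    angle_deg: float  # orientation measured in the image plane


@dataclass
class GripPose:
    """Planar grip target in the robot base frame (assumed flat conveyor)."""
    label: str
    x_mm: float
    y_mm: float
    rz_deg: float


def pixel_to_robot(det: Detection, affine: list) -> GripPose:
    """Map a detection to robot coordinates with a 2x3 affine calibration.

    affine = [[a, b, tx], [c, d, ty]] maps (u, v, 1) to (x, y) in mm.
    Assumes the calibration contains no mirroring, so image angles and
    robot angles rotate in the same sense.
    """
    (a, b, tx), (c, d, ty) = affine
    x = a * det.u + b * det.v + tx
    y = c * det.u + d * det.v + ty
    # Rotation of the camera axes relative to the robot axes, taken from
    # the linear part of the calibration matrix.
    frame_rot_deg = math.degrees(math.atan2(c, a))
    # Wrap the resulting gripper angle into [-180, 180).
    rz = (det.angle_deg + frame_rot_deg + 180.0) % 360.0 - 180.0
    return GripPose(det.label, x, y, rz)


if __name__ == "__main__":
    calib = [[0.25, 0.0, 300.0], [0.0, 0.25, -120.0]]  # illustrative values only
    print(pixel_to_robot(Detection("wrench", 640, 480, 15.0), calib))
```

A two-finger parallel gripper is symmetric under a 180° turn, so a real system might fold the angle further into [-90°, 90°); that choice depends on the gripper and is left out of this sketch.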

The next goal was to move the solution to the AI-based embedded vision system from IDS Imaging Development Systems GmbH. According to urobots, two requirements had to be met:

- Users needed to be able to adapt the system quickly to different use cases without specialist AI knowledge. This keeps the system working even when something changes in production, for example the appearance of the objects or the lighting, or when additional object types need to be integrated.

- To be cost-effective, lightweight, and space-saving, the system as a whole needed to be completely PC-free. This was accomplished through direct communication between device components.

The IDS NXT inference camera system from IDS meets both requirements.

“All image processing runs on the camera, which communicates directly with the robot via Ethernet. This is made possible by a vision app developed with the IDS NXT Vision App Creator, which uses the IDS NXT AI core,” explains Alexey Pavlov, Managing Director of urobots GmbH.
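Because the camera, rather than a PC, closes the loop between image and robot, one working cycle can be pictured as capture, inference, and robot command in sequence. The minimal Python sketch below shows that flow; `capture`, `infer`, and `send` are hypothetical stand-ins for image acquisition, the on-camera neural network, and the robot link, and the confidence threshold `min_score` is an assumed parameter that the article does not mention.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Detection:
    """Hypothetical inference result, already converted to robot coordinates."""
    label: str
    x_mm: float
    y_mm: float
    angle_deg: float
    score: float


def pick_cycle(capture: Callable[[], Any],
               infer: Callable[[Any], List[Detection]],
               send: Callable[[Detection], bool],
               min_score: float = 0.5) -> int:
    """Run one capture -> inference -> robot-command cycle.

    Returns the number of grip instructions the robot accepted.
    """
    image = capture()
    accepted = 0
    for det in infer(image):
        if det.score < min_score:
            continue  # skip uncertain detections rather than risk a bad grip
        if send(det):
            accepted += 1
    return accepted


if __name__ == "__main__":
    # Stubs standing in for the camera sensor, the network, and the robot.
    detections = [Detection("wrench", 412.5, -87.0, 33.0, 0.93),
                  Detection("unknown", 10.0, 5.0, 0.0, 0.21)]
    sent = []

    def fake_send(det: Detection) -> bool:
        sent.append(det)
        return True

    n = pick_cycle(lambda: object(), lambda img: detections, fake_send)
    print(n, [d.label for d in sent])
```

Passing the three stages in as callables keeps the cycle logic testable without hardware; on a real device each would be backed by the camera's own interfaces.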

Pavlov added, “The Vision App enables the camera to locate and identify pre-trained (2D) objects in the image information. For example, tools that lie on a plane can be gripped in the correct position and placed in a designated place. The PC-less system saves costs, space, and energy, allowing for easy and cost-effective picking solutions.”

Position Detection and Direct Machine Communication

A trained neural network recognizes all objects in the image together with their position and orientation. The AI can do this both for fixed objects that always look the same and for objects with a lot of natural variation, such as food, plants, or other flexible objects.

The result is very stable detection of each object's position and orientation. urobots GmbH trained the network for the customer using its own software and then uploaded it to the IDS NXT camera.

To do this, the network had to be translated into a special optimized format resembling a kind of “linked list.”

The IDS NXT ferry tool from IDS made porting the trained neural network to the inference camera very easy. During conversion, each layer of the CNN becomes a node descriptor that describes that layer precisely. The result is a complete concatenated list of the CNN in binary form.
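The idea of turning each layer into a node descriptor and concatenating the descriptors into one binary list can be illustrated with Python's `struct` module. The record layout (`<BHHBI`: type code, input channels, output channels, kernel size, byte offset of the next record) and the layer codes are invented for this sketch; the article does not describe the actual format produced by the IDS NXT ferry tool.

```python
import struct
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical layer type codes; NOT the real IDS NXT ferry encoding.
LAYER_CODES = {"conv": 1, "relu": 2, "maxpool": 3, "dense": 4}
CODE_NAMES = {code: name for name, code in LAYER_CODES.items()}

# One fixed-size record per layer (little-endian, no padding):
# type code, input channels, output channels, kernel size, offset of next record.
RECORD = struct.Struct("<BHHBI")

LayerSpec = Tuple[str, int, int, int]


@dataclass
class NodeDescriptor:
    layer_type: str
    in_channels: int
    out_channels: int
    kernel: int
    next: Optional["NodeDescriptor"] = None


def build_list(layers: List[LayerSpec]) -> Optional[NodeDescriptor]:
    """Chain layer specs into a singly linked list, first layer at the head."""
    head = None
    for spec in reversed(layers):
        head = NodeDescriptor(*spec, next=head)
    return head


def serialize(head: Optional[NodeDescriptor]) -> bytes:
    """Concatenate all node descriptors into one binary blob."""
    nodes = []
    while head is not None:
        nodes.append(head)
        head = head.next
    blob = bytearray()
    for i, n in enumerate(nodes):
        # Offset 0 marks the end of the list (only the head sits at offset 0).
        nxt = (i + 1) * RECORD.size if i + 1 < len(nodes) else 0
        blob += RECORD.pack(LAYER_CODES[n.layer_type], n.in_channels,
                            n.out_channels, n.kernel, nxt)
    return bytes(blob)


def deserialize(blob: bytes) -> List[LayerSpec]:
    """Follow the next-offsets through the blob and recover the layer specs."""
    layers, offset = [], 0
    while True:
        code, cin, cout, k, nxt = RECORD.unpack_from(blob, offset)
        layers.append((CODE_NAMES[code], cin, cout, k))
        if nxt == 0:
            return layers
        offset = nxt


if __name__ == "__main__":
    net = [("conv", 3, 16, 3), ("relu", 16, 16, 0),
           ("maxpool", 16, 16, 2), ("dense", 16, 4, 0)]
    blob = serialize(build_list(net))
    print(len(blob), deserialize(blob) == net)
```

Storing explicit next-offsets, rather than relying on records simply following each other, is what makes the blob a linked list: a reader only needs the start address and follows the chain.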

The IDS NXT deep ocean core, an FPGA-based CNN accelerator designed specifically for the camera, can then execute this universal CNN optimally.
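“Universal” here suggests that the accelerator does not need to be rebuilt for each network: it walks whatever list it is given and runs the matching operation for each node. The toy Python interpreter below illustrates that dispatch pattern on plain lists of floats. It is a software analogy under that assumption, not a model of how the FPGA-based deep ocean core actually works, and the `relu`, `scale`, and `dense` kernels are simplified stand-ins for real CNN layers.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Vector = List[float]


@dataclass
class Node:
    op: str                                     # which kernel to run
    params: dict = field(default_factory=dict)  # layer weights / settings
    next: Optional["Node"] = None               # following layer, None at the end


def relu(x: Vector, p: dict) -> Vector:
    return [max(0.0, v) for v in x]


def scale(x: Vector, p: dict) -> Vector:
    return [v * p["factor"] for v in x]


def dense(x: Vector, p: dict) -> Vector:
    # Fully connected layer: one weight row and one bias per output.
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(p["weights"], p["bias"])]


# The "engine" only knows these kernels; the network itself is pure data.
KERNELS: Dict[str, Callable[[Vector, dict], Vector]] = {
    "relu": relu, "scale": scale, "dense": dense,
}


def run(head: Optional[Node], x: Vector) -> Vector:
    """Execute any network expressed as a linked list of nodes."""
    node = head
    while node is not None:
        x = KERNELS[node.op](x, node.params)
        node = node.next
    return x


if __name__ == "__main__":
    net = Node("dense", {"weights": [[1.0, -1.0], [0.5, 0.5]], "bias": [0.0, -1.0]},
               Node("relu", {}, Node("scale", {"factor": 2.0})))
    print(run(net, [3.0, 1.0]))
```

Swapping in a different network means supplying a different list; `run` and the kernel table stay unchanged.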

The vision app created by urobots then calculates the robot's optimal grip from the detection data. This alone, however, did not complete the task: in addition to the results on what, where, and how to grip, direct communication between the IDS NXT camera and the robot had to be established.

This task should not be underestimated, as it often determines how much money, time, and labor a solution requires. Using the IDS NXT Vision App Creator, urobots developed the camera's vision app and used an XML-RPC-based network protocol to transmit concrete work instructions to the robot.
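Python's standard library ships an XML-RPC client and server, which makes it easy to sketch the kind of exchange described here. In this minimal example a local stub plays the robot and the camera side sends one grip instruction. The remote method name `grip_object` and its parameters (object label, x/y in millimetres, rotation in degrees) are hypothetical; the article does not say which methods urobots' protocol exposes.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer

received = []  # instructions recorded by the robot stub


def grip_object(label, x_mm, y_mm, angle_deg):
    """Hypothetical robot-side handler: record one pick instruction."""
    received.append((label, x_mm, y_mm, angle_deg))
    return True


def start_robot_stub():
    """Start a local XML-RPC server standing in for the robot controller."""
    # Port 0 lets the OS pick a free port; logRequests=False keeps output quiet.
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(grip_object, "grip_object")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def send_instruction(port, label, x_mm, y_mm, angle_deg):
    """Camera side: send one work instruction and return the robot's reply."""
    with ServerProxy(f"http://127.0.0.1:{port}/") as proxy:
        return proxy.grip_object(label, x_mm, y_mm, angle_deg)


if __name__ == "__main__":
    server = start_robot_stub()
    port = server.server_address[1]
    print(send_instruction(port, "wrench", 412.5, -87.0, 33.0), received)
    server.shutdown()
    server.server_close()
```

XML-RPC reduces the camera-to-robot link to typed remote function calls over HTTP; a production system would add timeouts and error handling around `send_instruction`.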

The finished AI vision app detects objects in as little as 200 milliseconds with an orientation accuracy of ±2 degrees.
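A rough calculation helps put these two figures in context. If images are processed one after another and detection takes 200 ms, the vision side alone can deliver at most five results per second; robot motion will usually be the slower part. An orientation error of 2° shifts a point at distance r from the rotation centre sideways by about r·sin 2°. The 50 mm lever arm used below is an arbitrary example, not a value from the article.

```python
import math


def max_results_per_second(latency_ms: float) -> float:
    """Upper bound on sequential detection results per second."""
    return 1000.0 / latency_ms


def lateral_offset_mm(lever_arm_mm: float, angle_error_deg: float) -> float:
    """Sideways displacement of a point lever_arm_mm from the rotation centre."""
    return lever_arm_mm * math.sin(math.radians(angle_error_deg))


if __name__ == "__main__":
    print(max_results_per_second(200.0))           # 5.0
    print(round(lateral_offset_mm(50.0, 2.0), 2))  # 1.74
```

So, at a 50 mm distance from the grip centre, a 2° error corresponds to under 2 mm of sideways offset.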

Figure 1. The neural network in the IDS NXT camera detects the objects and localizes their exact position. Based on this image information, the robot can grasp and deposit them independently. Image Credit: IDS Imaging Development Systems GmbH.

PC-Less: More Than Merely Artificially Intelligent

This use case is smart in more ways than its artificial intelligence. Running the solution without a PC brings two further advantages. First, because the camera itself delivers image processing results rather than just images, the PC hardware and all of its supporting infrastructure can be eliminated.

Naturally, this reduces the system’s acquisition and maintenance costs in the long run.

Second, process decisions are made directly at the production site, in good time or immediately. Downstream processes can therefore run faster and without delay, which in some cases also allows the cycle rate to be increased.

Development costs are another interesting aspect. AI vision, that is, training a neural network, works differently from classical rule-based image processing, and this changes how image processing tasks are approached.

The quality of the results is no longer determined by program code written by hand by image processing specialists and application developers. Consequently, if an application can be solved with AI, IDS NXT can save the user both time and money.

This is because the user-friendly, comprehensive software environment enables every user to train a neural network, create the matching vision app, and run it on the camera.

Summary

The EyeBot use case shows the potential of computer vision and how it can be used for embedded AI vision applications without a PC.

The small embedded system has additional benefits, including expandability through the vision app-based concept, the development of applications for different target groups, and end-to-end manufacturer support.

In EyeBot, responsibilities are clearly divided. IDS and urobots concentrate on training and managing the AI for image processing and robot control, so the user can stay focused on the product at hand.

Thanks to Ethernet-based communication and the open IDS NXT platform, the vision app can also easily be adapted to other objects, other robot models, and many similar applications.

Image Credit: IDS Imaging Development Systems GmbH

This information has been sourced, reviewed and adapted from materials provided by IDS Imaging Development Systems GmbH.

For more information on this source, please visit IDS Imaging Development Systems GmbH.