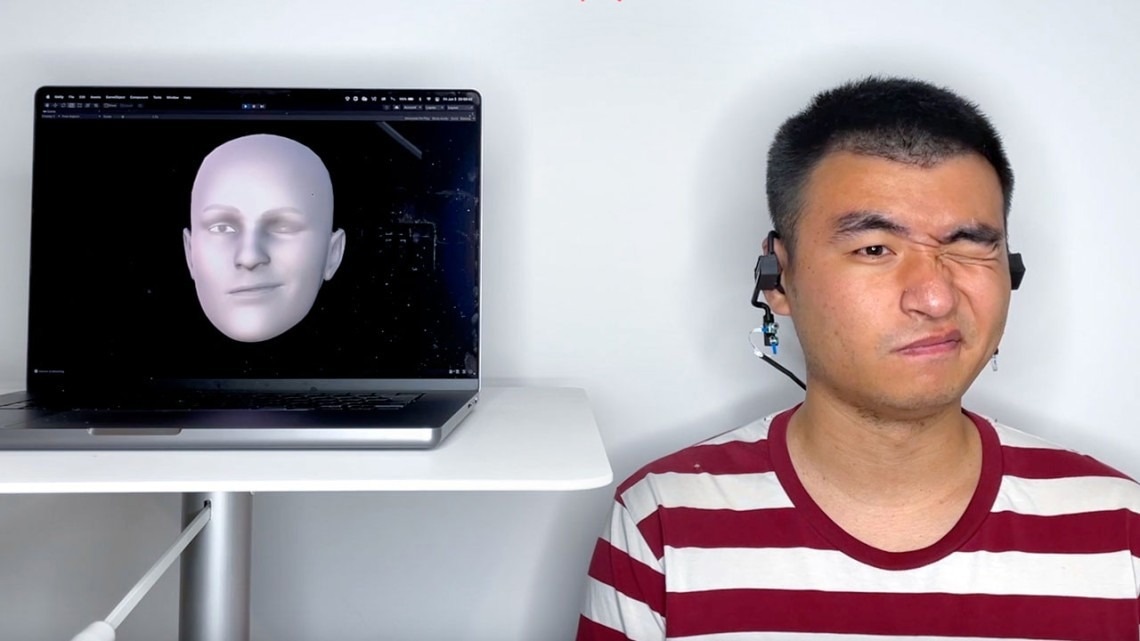

Scientists at Cornell University have developed a wearable earphone device, a so-called “earable,” that bounces sound off the cheeks and converts the echoes into a moving avatar of a person’s entire face.

The earable performs as well as camera-based face tracking technology but uses less power and offers more privacy. Image Credit: Ke Li/Provided.

The system, named EarIO, was designed by a research team headed by Cheng Zhang, assistant professor of information science, and François Guimbretière, professor of information science, both in the Cornell Ann S. Bowers College of Computing and Information Science.

It transmits facial movements to a smartphone in real time and is compatible with commercially available headsets for hands-free, cordless video conferencing.

Devices that track facial movements with a camera are “large, heavy and energy-hungry, which is a big issue for wearables. Also importantly, they capture a lot of private information,” said Zhang, principal investigator of the Smart Computer Interfaces for Future Interactions (SciFi) Lab.

Acoustic facial tracking can offer better affordability, comfort, privacy, and battery life.

The team described their device in the journal Proceedings of the Association for Computing Machinery on Interactive, Mobile, Wearable and Ubiquitous Technologies.

EarIO works like a ship sending out sonar pulses. A speaker on each side of the earphone transmits acoustic signals toward the sides of the face, and a microphone picks up the echoes.

As wearers smile, talk, or raise their eyebrows, the skin moves and stretches, changing the echo profiles. A deep learning algorithm developed by the researchers continuously processes this data and translates the shifting echoes into complete facial expressions.
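The article does not say what signal EarIO transmits or how its echo profiles are computed. As a rough illustration of the sonar idea only, the sketch below assumes a known pseudo-random pulse and builds an “echo profile” by cross-correlating each received frame with that pulse, a common approach in active acoustic sensing: the position of a peak marks the delay of an echo, and subtracting consecutive profiles highlights what moved. The function names, pulse length, delays, and gains are all hypothetical, and the deep learning step that turns profiles into facial expressions is not shown.

```python
import numpy as np

# Hypothetical parameters: EarIO's real signal design is not described in the article.
PULSE_LEN = 64
FRAME_LEN = 256


def make_pulse(seed: int = 0) -> np.ndarray:
    """Pseudo-random +/-1 pulse (an assumption; the actual waveform may differ)."""
    rng = np.random.default_rng(seed)
    return rng.choice([-1.0, 1.0], size=PULSE_LEN)


def simulate_frame(pulse: np.ndarray, echoes: list[tuple[int, float]]) -> np.ndarray:
    """Toy received frame: delayed, attenuated copies of the pulse, no noise."""
    frame = np.zeros(FRAME_LEN)
    for delay, gain in echoes:
        frame[delay:delay + len(pulse)] += gain * pulse
    return frame


def echo_profile(frame: np.ndarray, pulse: np.ndarray) -> np.ndarray:
    """Cross-correlate the frame with the pulse; index k is echo strength at delay k."""
    return np.abs(np.correlate(frame, pulse, mode="valid"))


def differential_profile(prev: np.ndarray, curr: np.ndarray) -> np.ndarray:
    """Change between consecutive echo profiles; all zeros when nothing moved."""
    return curr - prev


if __name__ == "__main__":
    pulse = make_pulse()
    # Made-up scene: a strong "cheek" echo at delay 40 and a weaker echo at delay 120.
    before = echo_profile(simulate_frame(pulse, [(40, 0.8), (120, 0.3)]), pulse)
    # The skin shifts slightly, so the cheek echo now arrives 2 samples later.
    after = echo_profile(simulate_frame(pulse, [(42, 0.8), (120, 0.3)]), pulse)
    print("strongest echo before:", int(np.argmax(before)))  # 40
    print("strongest echo after:", int(np.argmax(after)))    # 42
    print("max profile change:", float(np.abs(differential_profile(before, after)).max()))
```

In this toy run the strongest echo shifts from delay 40 to delay 42 and the differential profile becomes non-zero. That kind of change is what a learned model would then have to interpret as a facial movement.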

Through the power of AI, the algorithm finds complex connections between muscle movement and facial expressions that human eyes cannot identify. We can use that to infer complex information that is harder to capture—the whole front of the face.

Ke Li, Study Co-Author and Doctoral Student, Field of Information Science, Cornell University

In earlier work, Zhang’s lab tracked facial movements using camera-equipped earphones that recreated the full face from cheek movements as seen from the ear.

Because it collects sound rather than data-heavy images, the earable can communicate with a smartphone over a wireless Bluetooth connection, keeping users’ information private. With images, the device would need to connect to a Wi-Fi network and send data back and forth to the cloud, potentially exposing it to hackers.

“People may not realize how smart wearables are—what that information says about you, and what companies can do with that information,” stated Guimbretière.

From face images, someone could also infer a person’s actions and emotions.

Guimbretière added, “The goal of this project is to be sure that all the information, which is very valuable to your privacy, is always under your control and computed locally.”

Acoustic sensing also takes less energy than recording images: EarIO uses 1/25 of the energy of another camera-based system previously developed by the Zhang lab. Currently, the earable lasts about three hours on a wireless earphone battery, and future research will focus on extending that usage time.

The researchers tested the device on 16 participants and used a smartphone camera to verify the accuracy of its face-mimicking performance. Initial experiments show that it works while users are sitting and walking around, and that road noise, wind, and background conversations do not interfere with its acoustic signaling.

In future versions, the researchers hope to improve the earable’s ability to tune out nearby noises and other disruptions.

The acoustic sensing method that we use is very sensitive. It’s good, because it’s able to track very subtle movements, but it’s also bad because when something changes in the environment, or when your head moves slightly, we also capture that.

Ruidong Zhang, Study Co-Author and Doctoral Student, Information Science, Cornell University

The technology currently has one limitation: before first use, EarIO must collect 32 minutes of facial data to train the algorithm.

Eventually we hope to make this device plug and play.

Ruidong Zhang, Study Co-Author and Doctoral Student, Information Science, Cornell University

The study was funded by Cornell Bowers CIS.

Journal Reference:

Li, K., et al. (2022) EarIO: A Low-power Acoustic Sensing Earable for Continuously Tracking Detailed Facial Movements. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. doi.org/10.1145/3534621.

Source: https://www.cornell.edu/