For the last four years, we have been working on a new method to add the missing depth dimension to standard image sensors. Image sensors, and in particular CMOS image sensors, have improved significantly over the last several decades.

We now have billions of such sensors everywhere, including in our smartphones. However, these sensors can only see the world in 2D, which means they capture only the brightness and color of each pixel.

Depth, the third dimension, is critical for perceiving our environment well. There is no straightforward way to add this missing depth dimension to these ubiquitous sensors. Instead, seeing in 3D requires specialized and typically expensive systems known as lidar.

We have recently demonstrated that adding simple optical components to any digital camera enables it to see the world in 3D.

To accomplish this, we have invented a new optical modulator with record-setting energy efficiency.

What makes our work attractive is that this modulator is simple to manufacture and integrates easily with any camera.

What is the significance of developing sensors that can see the world in 3D? Are there any specific future technologies or applications this advancement could unlock?

Existing standard image sensors can only perceive the world in 2D (brightness and color). However, having the capability to measure the distance per pixel allows for enhanced perception of the environment. This is especially critical for autonomous systems, including self-driving vehicles and drones.

We are witnessing increased automation, and these platforms need to perceive their environment well to navigate safely in dynamic environments. Standard image sensors, especially CMOS sensors, offer high performance (tens of megapixels of resolution) but cannot perceive depth. Our technology could enhance the imaging performance of these sensors by adding the missing 3D information.

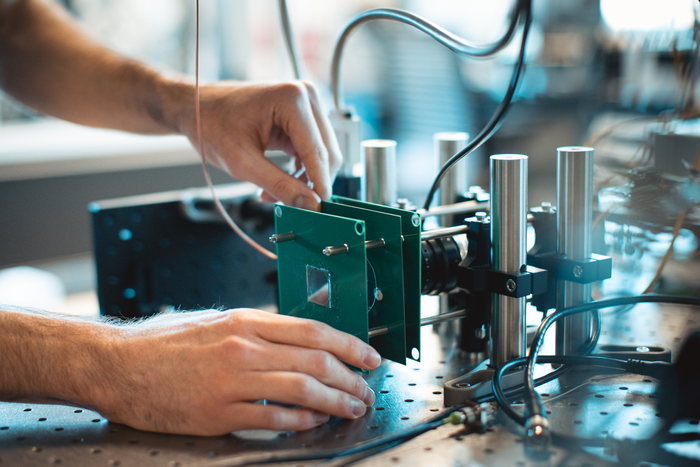

The lab-based prototype lidar system that the research team built, which successfully captured megapixel-resolution depth maps using a commercially available digital camera. © Andrew Brodhead

How is megapixel-resolution lidar defined, and why is it so difficult to obtain?

One key metric for any lidar system is the number of points at which it measures distance; the technical term for this is spatial resolution. It is the same quantity people mean when they quote the number of pixels in their smartphone cameras.

Although we have become accustomed to megapixel resolution with our daily use of cameras, it is challenging to achieve this resolution in lidar systems. The most commonly used lidar systems are scanning lidars, where a laser beam is scanned across a scene to measure the distance of each point. Although light travels extremely fast, it is still not fast enough to scan millions of points with a high refresh rate. This limits the spatial resolution of scanning lidar systems.
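To make the scanning limit concrete, here is a back-of-the-envelope sketch, assuming a single scanned beam that waits for each pulse to return before firing the next. The 150 m range and 30 frames per second are hypothetical values, not figures from the interview, and the estimate ignores scanner mechanics, detector dead time, and processing overhead, all of which lower the budget further.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def round_trip_time(max_range_m: float) -> float:
    """Time for light to reach a target at max_range_m and come back, in seconds."""
    return 2.0 * max_range_m / SPEED_OF_LIGHT


def max_points_per_frame(max_range_m: float, frame_rate_hz: float) -> int:
    """Upper bound on the points one beam can measure in a single frame.

    Assumes only one pulse is in flight at a time (a simplifying assumption).
    """
    points_per_second = 1.0 / round_trip_time(max_range_m)
    return int(points_per_second / frame_rate_hz)


if __name__ == "__main__":
    # Hypothetical operating point: 150 m maximum range, 30 frames per second.
    budget = max_points_per_frame(150.0, 30.0)
    print(f"points per frame: {budget}")
    print(f"fraction of a 1-megapixel depth map: {budget / 1_000_000:.3f}")
```

Under these assumptions a single beam manages only a few tens of thousands of points per frame, a small fraction of a megapixel, which is why scanning designs struggle to reach camera-like resolution.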

On the other hand, lidar systems that operate similarly to a camera (flash lidar) do not have this scanning problem, but they face another issue: scalability. Manufacturing millions of specialized depth-sensing pixels at scale is not easy, which makes it difficult to achieve megapixel resolution in lidar cost-effectively.

What is 'acoustic resonance', and what role did it play in achieving a 3D image sensor system?

Acoustic resonance refers to the amplification of an acoustic wave in a system when the excitation frequency matches one of the system's natural frequencies. Acoustic resonance is critical for our system because it dramatically reduces the power required to operate our modulator. Without acoustic resonance, our modulator would require kilowatts of power, which is impractical; with it, the modulator requires approximately 1 watt to operate.
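The amplification described above can be illustrated with the textbook driven, damped harmonic oscillator. This is a generic lumped model, not the authors' device model, and the resonant frequency and quality factor Q used below are hypothetical, since the interview does not state them. For the same drive force, the steady-state amplitude at resonance is Q times the static response, so a much smaller drive suffices to reach a target amplitude.

```python
import math


def amplitude_gain(drive_freq_hz: float, natural_freq_hz: float, quality_factor: float) -> float:
    """Steady-state amplitude of a driven, damped harmonic oscillator,
    normalized to its static (zero-frequency) response to the same force.
    """
    r = drive_freq_hz / natural_freq_hz  # ratio of drive to natural frequency
    return 1.0 / math.sqrt((1.0 - r**2) ** 2 + (r / quality_factor) ** 2)


if __name__ == "__main__":
    f0 = 2e6  # hypothetical natural frequency, Hz
    q = 1000.0  # hypothetical quality factor
    for f in (0.5 * f0, 0.99 * f0, f0):
        print(f"drive at {f / 1e6:.2f} MHz -> gain {amplitude_gain(f, f0, q):.1f}")
```

Driving exactly at the natural frequency gives a gain equal to Q, while even a small detuning loses most of it, which is why the excitation must be matched to the resonance.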

What industrial sectors would benefit the most from the results of your research? Could this advancement be available to consumers one day?

In general, any sector that uses cameras could benefit from this research. Specific examples include robotics, drones, security cameras, and smartphone cameras.

We are still improving the device and system, and with further improvements we believe it could one day be available to consumers.

What are the next steps for this area of research?

We are working on increasing the modulation frequency of our modulator (which is related to ranging accuracy) while also reducing the power required to operate it. We still need to see how far we can push the device's performance, but we do not see any major obstacles at the moment.
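One common way modulation frequency ties to ranging accuracy is amplitude-modulated continuous-wave (phase-based) time of flight, where depth is recovered from the phase shift of the modulated light. The interview does not say this is the exact scheme used, so treat the sketch as an assumption; the 2 MHz and 10 MHz frequencies and the 0.01 rad phase noise are hypothetical values. With these standard relations, depth uncertainty shrinks in proportion to 1/f, at the cost of a shorter unambiguous range.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance implied by a measured phase shift of the modulation envelope."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


def depth_std(phase_std_rad: float, mod_freq_hz: float) -> float:
    """Depth uncertainty for a given phase noise; scales as 1/mod_freq_hz."""
    return SPEED_OF_LIGHT * phase_std_rad / (4.0 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    phase_noise = 0.01  # hypothetical phase noise, radians
    for f in (2e6, 10e6):  # hypothetical modulation frequencies, Hz
        print(
            f"{f / 1e6:.0f} MHz: depth std {depth_std(phase_noise, f) * 100:.1f} cm, "
            f"unambiguous range {unambiguous_range(f):.1f} m"
        )
```

Raising the modulation frequency fivefold cuts the depth uncertainty fivefold for the same phase noise, while the unambiguous range shrinks by the same factor; practical systems often combine several frequencies to recover the lost range.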

In your opinion, what was the most exciting aspect of this investigation?

For me, it was witnessing the modulator working for the first time. For the first two years we had only theory and simulation, so it was a magical moment to observe that the theory and simulation framework we had built predicted the modulator's performance extremely well.

About Okan Atalar

Okan Atalar earned a bachelor's degree in Electrical and Electronics Engineering from Bilkent University in 2016, and a master's degree in Electrical Engineering from Stanford University in 2018. Since 2016, he has been pursuing a Ph.D. in the Electrical Engineering department at Stanford University. His research focuses on developing next-generation optical depth-sensing technology.


Disclaimer: The views expressed here are those of the interviewee and do not necessarily represent the views of AZoM.com Limited (T/A) AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and Conditions of use of this website.