Advances in deep learning are opening up new fields of application for industrial image processing, applications that were historically only viable with considerable effort or not at all.

This new approach to image processing differs fundamentally from classic methods and poses a range of new challenges for users. IDS has developed an all-in-one embedded vision solution that enables any user to implement AI-based image processing, using a camera as an embedded inference system.

This can be achieved quickly and easily, without advanced programming knowledge, making deep learning far more accessible and user-friendly.

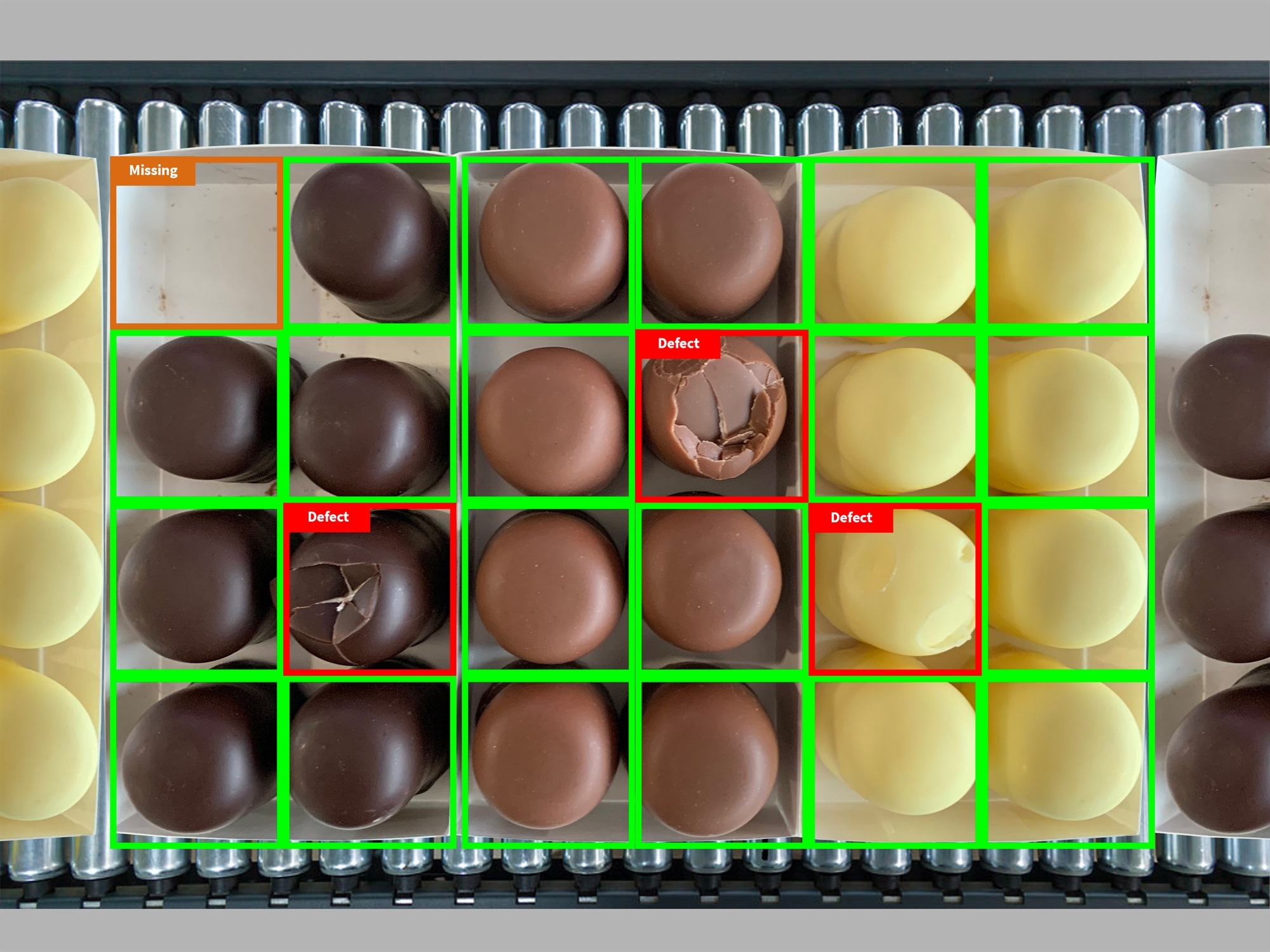

Today, computer vision and image processing solutions are indispensable in a wide range of industries and sectors. Image processing systems must often handle a constantly growing range of products and variants, as well as organic objects such as plants, fruit or vegetables.

Artificial intelligence can easily handle varying object conditions. Image Credit: IDS Imaging Development Systems GmbH

Conventional rule-based image processing faces significant limitations in applications where the image data varies frequently or exhibits differences that are difficult or impossible to describe algorithmically. In such cases, a rigid set of rules cannot deliver robust automation.

This is often the case for tasks that a human being would find easy. For example, a child can recognize a car even if they have never seen that particular model before; having seen other car models and types is enough.

Machine learning offers the ability to make flexible, independent decisions, and this ability can now be transferred to image processing systems. With neural networks and deep learning algorithms, a computer can see objects, recognize them and draw conclusions from what it has learned.

This process resembles human learning: the intelligent automation learns from empirical values and makes decisions based on them.

Differences from Classic Image Processing

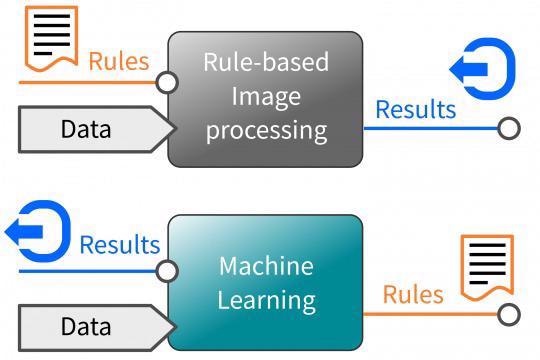

The primary difference between deep learning and rule-based image processing is how and by whom image characteristics are identified and how this learned knowledge is then represented.

In the classic, symbolic approach, an image processing specialist selects the decisive image features and then describes them with specific rules.

The software can only recognize what the rules cover, so many lines of source code are needed to describe a task in sufficient detail. Execution then takes place within these defined limits, with no room for interpretation: all of the intellectual work is the responsibility of the image processing expert.
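To make this concrete, here is a minimal Python sketch of rule-based inspection. It assumes the specialist has already reduced each part to three features; the feature names (`mean_brightness`, `edge_count`, `area_px`) and every threshold are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class PartFeatures:
    """Features the specialist has decided are decisive (hypothetical)."""
    mean_brightness: float  # 0..255
    edge_count: int         # number of detected edges
    area_px: int            # object area in pixels


def inspect_rule_based(f: PartFeatures) -> str:
    # Each rule encodes an explicit, human-chosen limit (all values assumed).
    if not 90 <= f.mean_brightness <= 160:
        return "bad: brightness out of range"
    if f.edge_count > 40:
        return "bad: too many edges (possible scratch)"
    if not 4800 <= f.area_px <= 5200:
        return "bad: wrong size"
    return "good"


print(inspect_rule_based(PartFeatures(120.0, 12, 5000)))  # good
print(inspect_rule_based(PartFeatures(120.0, 55, 5000)))  # bad: too many edges (possible scratch)
```

Any defect that no rule anticipates, for instance a discoloration that leaves the mean brightness within range, passes as "good"; covering it means writing yet another rule.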

Working with neural networks is considerably different from this rule-based approach. Neural networks have the ability to independently learn which image characteristics are important in order to draw the most appropriate conclusion.

This can be considered a non-symbolic approach, because the knowledge is implicit and offers no insight into the solutions learned. Which characteristics are stored, how they are weighted and which conclusions are drawn depend solely on the content and quantity of the training images.
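The idea can be illustrated with a perceptron, one of the simplest learning algorithms, used here as a stand-in for a deep network. Nobody writes thresholds; the weights, which encode how much each feature matters, come only from labeled examples. The two feature values per part and the labels are made-up data.

```python
def train_perceptron(samples, labels, lr=0.1, max_epochs=100):
    """Learn weights w and bias b from labeled examples (labels are +1 or -1)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:
                # Misclassified: shift the decision boundary toward the correct side.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:
            break  # every training example is now classified correctly
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical features per part: [scratch_score, colour_deviation], both 0..1.
samples = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.15],  # labeled good (+1)
           [0.90, 0.30], [0.80, 0.20], [0.20, 0.90]]  # labeled bad (-1)
labels = [1, 1, 1, -1, -1, -1]

w, b = train_perceptron(samples, labels)
print("learned weights:", w, "bias:", b)
print([predict(w, b, x) for x in samples])  # [1, 1, 1, -1, -1, -1]
```

Changing the training examples changes the learned weights. The resulting numbers carry no explicit explanation of why a part is rejected, which mirrors the non-symbolic nature of the knowledge described above.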

Deep learning algorithms can recognize and analyze the entire image content, associating the characteristics they recognize with the terms to be learned according to how frequently those characteristics occur.

The statistical frequency of these characteristics can be understood as ‘experience’ during training.

Machine Learning: teach through examples. Image Credit: IDS Imaging Development Systems GmbH

At the 2019 Web Summit in Lisbon, Google's artificial intelligence specialist Cassie Kozyrkov described machine learning as a programming tool: a way of teaching a machine with examples rather than explicit instructions.

The development of AI-based machine vision applications must therefore be rethought. The quality of the results (for example, the speed and reliability of object detection) depends on what a neural network is able to detect and conclude.

The expertise of the person setting up the network is therefore key, because they must provide the training data sets, including as many different example images of the terms to be learned as possible.
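A simple first check on a training set is whether every term to be learned has enough example images. The sketch below counts labels per class; the label list and the `min_per_class=50` threshold are hypothetical rules of thumb, not IDS recommendations.

```python
from collections import Counter


def audit_labels(labels, min_per_class=50):
    """Count labeled example images per class and list classes below min_per_class."""
    counts = Counter(labels)
    weak = sorted(c for c, n in counts.items() if n < min_per_class)
    return counts, weak


# Hypothetical label list: one entry per labeled training image.
labels = ["good"] * 400 + ["scratch"] * 120 + ["dent"] * 15
counts, weak = audit_labels(labels)
print(dict(counts))  # {'good': 400, 'scratch': 120, 'dent': 15}
print(weak)          # ['dent']
```

Counts measure quantity only; the variety within each class (lighting, orientation, product variants) still has to be judged by the person preparing the data.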

With the classic approach, this responsibility fell to an image processing specialist. In machine learning, however, this is the responsibility of a data specialist.

The Challenges of Developing New AI Applications

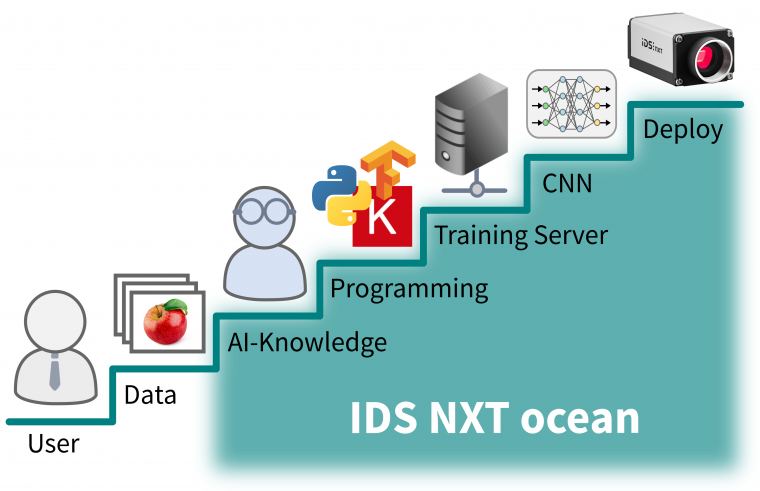

Breaking the development of an AI application down into individual steps reveals tasks and concepts that differ entirely from those of the classic approach.

Handling and preparing image data and training neural networks require completely new tools and development frameworks, each of which must be installed and run on a suitable PC infrastructure.

Instructions and open source software are generally freely available on platforms such as GitHub or from cloud providers, but these are often basic tools that require considerable experience to operate properly.

With these open source tools, running training on a suitable hardware platform and evaluating the results requires an understanding of hardware, software and the interfaces between these two layers.

Lower entry barrier with easy-to-use tools. Image Credit: IDS Imaging Development Systems GmbH

A Machine Learning All-in-One Solution

IDS’ goal is to support the user from their first steps using this new technology. In order to do this, IDS offers a deep learning experience and high-end camera technology in a single all-in-one inference camera solution.

Anyone can start working with AI-based image processing immediately thanks to IDS NXT ocean, a system that lowers the barrier to entry for AI imaging applications.

A series of easy-to-use tools can be used to create inference tasks in a matter of minutes with very little prior knowledge. These tasks can then be immediately executed on a camera.

The system is based on three key components:

- User-friendly neural network training software

- An intelligent camera platform

- An AI accelerator that executes the neural networks on the hardware

Each of these components has been developed by IDS to work together seamlessly, ensuring user-friendliness while making the overall system very powerful.

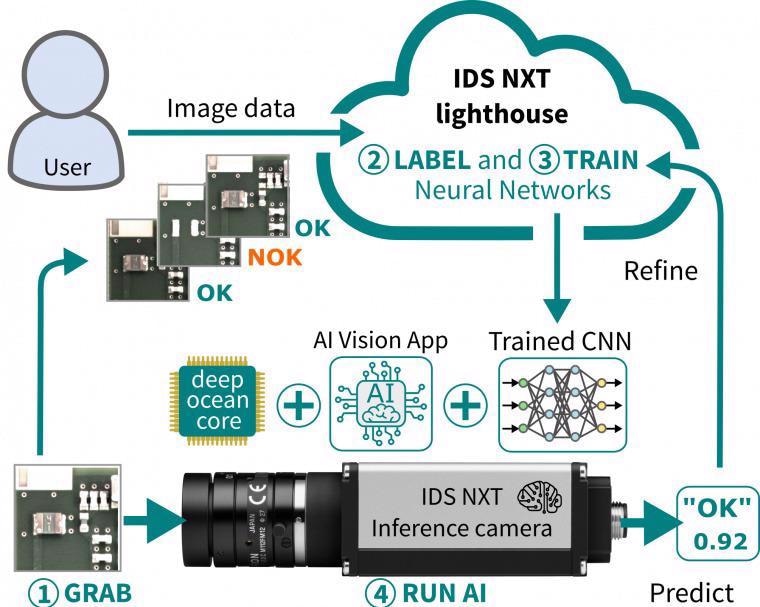

IDS NXT lighthouse is a cloud-based training package that guides users from data preparation through to training the neural network. Users do not need to work with advanced tools or install development environments.

As a web application, IDS NXT lighthouse is ready for use immediately. An intuitive workflow, storage space and sufficient computing power for training are all included; users simply log in, upload and label their training images, and then train the desired network.

Users also benefit from the reliable data center and robust network architecture of German servers operated by Amazon Web Services (AWS), ensuring the utmost levels of data protection and security.

Users specify an application's speed and accuracy requirements via simple dialogs and configuration settings, after which IDS NXT lighthouse independently selects the network and sets up the necessary training parameters.

Training results provide the user with an indication of the quality of the trained intelligence, allowing them to modify and repeat the training process as required.

IDS NXT lighthouse is continuously upgraded and improved, and because it is delivered via the web, the latest version is always available without users having to manage updates or maintenance.

The training software uses supervised learning: the deep learning algorithms learn from predefined pairs of inputs and outputs. The user supplies the correct output for each input by assigning the appropriate class to each example image.

The network then makes associations independently. It expresses its predictions for the image data as percentages, where a higher value indicates greater confidence that the image belongs to a given class.
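Classification networks commonly turn their raw output scores into such percentages with the softmax function. Whether IDS NXT uses exactly this normalization is an assumption here; the class names and scores below are hypothetical.

```python
import math


def softmax_percent(logits):
    """Convert raw network scores (logits) into percentages that sum to 100."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [100.0 * e / total for e in exps]


classes = ["good", "scratch", "dent"]
scores = [2.0, 0.5, -1.0]  # hypothetical raw outputs of a trained classifier
for name, p in zip(classes, softmax_percent(scores)):
    print(f"{name}: {p:.1f} %")
# good: 78.6 %
# scratch: 17.5 %
# dent: 3.9 %
```

A high percentage expresses the network's confidence, not a guarantee of correctness; a network can be confidently wrong on images unlike anything in its training data.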

Fully trained neural networks can be uploaded and executed directly on the IDS NXT cameras without additional programming, meaning that the user has immediate access to a fully functional embedded vision system able to see, recognize and derive results from captured image data.

Machines can also be controlled directly thanks to the cameras’ digital interfaces, such as REST and OPC UA.

Seamless interaction of soft- and hardware. Image Credit: IDS Imaging Development Systems GmbH

Embedded Vision Hybrid System

IDS has successfully developed an AI core for the FPGA of the intelligent IDS NXT camera platform.

Deep Ocean Core is able to execute pre-trained neural networks on a hardware-accelerated basis, turning industrial cameras into high-performance inference cameras and offering useful artificial intelligence capabilities in industrial environments.

Image analysis is performed decentrally on the camera itself, so only results rather than full images need to be transmitted, avoiding potential bandwidth bottlenecks.
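A back-of-the-envelope calculation shows why on-camera analysis relieves the network link. The sensor format (1920 × 1080, 8-bit monochrome, 30 fps) and the 200-byte result message per frame are assumed values, not IDS NXT specifications.

```python
def stream_rate_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Data rate of an uncompressed image stream in bytes per second."""
    return width * height * bytes_per_pixel * fps


raw = stream_rate_bytes_per_s(1920, 1080, 1, 30)  # assumed sensor settings
result = 200 * 30                                 # assumed 200-byte result per frame

print(f"raw images  : {raw / 1e6:.1f} MB/s ({raw * 8 / 1e6:.0f} Mbit/s)")  # 62.2 MB/s (498 Mbit/s)
print(f"results only: {result / 1e3:.1f} kB/s")                           # 6.0 kB/s
print(f"reduction   : {raw / result:.0f}x")                               # 10368x
```

At these assumed settings, a single raw stream would occupy roughly half of a 1 Gbit/s Ethernet link, while the result messages are negligible.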

These capabilities allow cameras based on the IDS NXT platform to keep pace with modern desktop CPUs, delivering comparable accuracy and speed while requiring significantly less space and energy.

The FPGA is relatively easy to reprogram, which offers further advantages in terms of future-proofing, time to market and low recurring costs.

Extendable functionality using apps and CNNs. Image Credit: IDS Imaging Development Systems GmbH

Because IDS develops both the software and the hardware, users can select the target inference time before training. IDS NXT lighthouse then determines the optimal training settings, taking the performance of the camera's AI core into account.

Users therefore know in advance how the network will perform when the inference is later executed on the camera, removing the need for time-consuming retraining or readjustment.
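The underlying trade-off can be sketched as picking the most accurate network that still meets the time budget. This is not IDS NXT lighthouse's actual selection logic, which is not documented here; the candidate names, latencies and accuracies are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    latency_ms: float  # assumed inference time on the camera's AI core
    accuracy: float    # assumed validation accuracy (0..1)


def pick_network(candidates: List[Candidate], max_latency_ms: float) -> Optional[Candidate]:
    """Return the most accurate candidate that meets the time budget, or None."""
    feasible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


CANDIDATES = [  # hypothetical networks
    Candidate("small-net", 8.0, 0.91),
    Candidate("medium-net", 20.0, 0.95),
    Candidate("large-net", 55.0, 0.97),
]

print(pick_network(CANDIDATES, 25.0).name)  # medium-net
print(pick_network(CANDIDATES, 5.0))        # None
```

Fixing the budget before training, rather than measuring afterwards, is what avoids a network that proves too slow on the target hardware.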

Once it has been integrated, the IDS NXT system will maintain compatible, consistent behaviour – an essential consideration when working with industrially certified applications.

Extendable Functionality via Apps and CNNs

The embedded vision platform’s robust and powerful hardware allows it to offer much more than simply an inference camera for executing neural networks. The CPU-FPGA combination offers a feature set that can be expanded according to application requirements.

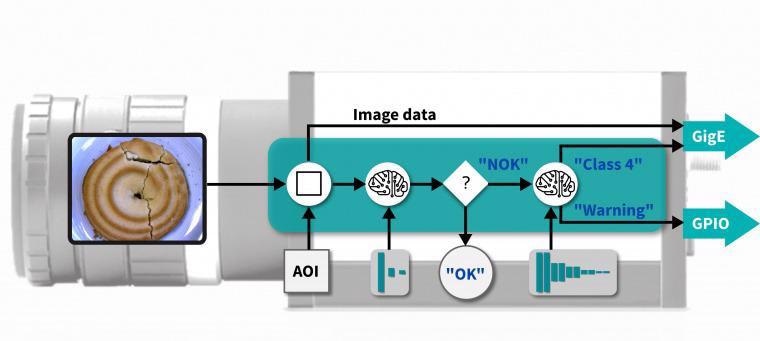

Recurring vision tasks can be set up and changed easily, and even entire image processing sequences can be run flexibly. For example, captured images can first be pre-screened with a simple classification that distinguishes good parts from bad ones.

If errors are detected, a complex and comprehensive neural network can be reloaded in milliseconds in order to determine the class of error in much more detail before transferring the results to a database.
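The two-stage sequence above can be sketched as a cascade in which the detailed model is loaded only for parts flagged as bad. Both classifiers are hypothetical placeholders (simple pixel tests rather than real networks), and a Python list stands in for the database.

```python
from typing import Callable, List

Image = List[List[int]]  # hypothetical: grayscale image as rows of pixels
Classifier = Callable[[Image], str]


def quick_check(img: Image) -> str:
    # Hypothetical stage-1 classifier: any saturated pixel marks the part as bad.
    return "bad" if any(p == 255 for row in img for p in row) else "good"


class DetailedModelLoader:
    """Hypothetical stand-in for loading a larger network only when needed."""

    def __init__(self) -> None:
        self.loads = 0

    def load(self) -> Classifier:
        self.loads += 1
        # Placeholder "network" that distinguishes two made-up defect classes.
        return lambda img: "scratch" if sum(p == 255 for row in img for p in row) > 1 else "spot"


def run_cascade(img: Image, loader: DetailedModelLoader, database: List[str]) -> str:
    if quick_check(img) == "good":
        return "good"  # most parts stop here; the detailed model is never loaded
    detailed = loader.load()
    defect = detailed(img)
    database.append(defect)  # stand-in for writing the result to a real database
    return defect


db: List[str] = []
loader = DetailedModelLoader()
print(run_cascade([[10, 20], [30, 40]], loader, db))    # good
print(run_cascade([[255, 255], [30, 40]], loader, db))  # scratch
print(loader.loads, db)                                 # 1 ['scratch']
```

Keeping the cheap check first means the cost of the detailed model is paid only for the minority of images that need it.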

An app development kit makes it easy to implement customized solutions: users can create their own vision apps and then install and run them on IDS NXT cameras.

IDS NXT cameras are designed as hybrid systems that combine classic image processing for pre-processing the image data with feature extraction via neural networks, side by side. This allows them to run a range of image processing applications efficiently on a single device.
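The division of labor in such a hybrid pipeline can be sketched in a few lines: classic operations (region-of-interest crop, min-max contrast stretch) prepare the data, and a neural layer extracts features. The single dense ReLU layer and its weights are hypothetical placeholders; on the camera, the network itself would run on the FPGA's AI core rather than in Python.

```python
from typing import List

Image = List[List[float]]


def crop(img: Image, top: int, left: int, h: int, w: int) -> Image:
    """Classic step: cut out a region of interest."""
    return [row[left:left + w] for row in img[top:top + h]]


def normalize(img: Image) -> Image:
    """Classic step: min-max contrast stretch to the range 0..1."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on flat images
    return [[(p - lo) / span for p in row] for row in img]


def dense_relu(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Neural step: one fully connected layer, y = ReLU(W x + b)."""
    return [max(0.0, sum(w * xi for w, xi in zip(wrow, x)) + b)
            for wrow, b in zip(weights, bias)]


def hybrid_pipeline(img: Image, weights, bias) -> List[float]:
    roi = normalize(crop(img, 1, 1, 2, 2))  # classic pre-processing
    x = [p for row in roi for p in row]     # flatten the ROI into a vector
    return dense_relu(x, weights, bias)     # learned feature extraction


img = [[0, 0, 0, 0], [0, 50, 100, 0], [0, 150, 200, 0], [0, 0, 0, 0]]
W = [[1.0, 1.0, 1.0, 1.0], [1.0, -1.0, 0.0, 0.0]]  # hypothetical weights
print(hybrid_pipeline(img, W, [0.0, 0.5]))
```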

Summary

IDS NXT ocean's closely matched combination of hardware and software unlocks meaningful, user-friendly deep learning applications for a wide range of industries. Automated, intelligent detection tasks are now simpler than ever in many fields, and many applications are possible for the very first time.

AI-based image processing solutions can now be created and executed without programming knowledge, with the cloud-based IDS NXT lighthouse training software allowing storage space and training performance to be scaled to user requirements.

The latest version of the software is always available for every user, with no updates or maintenance periods required.

IDS also offers an inference starter package with all the required components to facilitate the first step into AI-based image processing. A camera is provided with a power supply and lens, as well as a training license for IDS NXT lighthouse.

This information has been sourced, reviewed and adapted from materials provided by IDS Imaging Development Systems GmbH.

For more information on this source, please visit IDS Imaging Development Systems GmbH.