Jun 30 2020

Bioengineers from the University of California, Los Angeles (UCLA) have developed a glove-like device that uses a smartphone app to translate American Sign Language into English speech in real time.

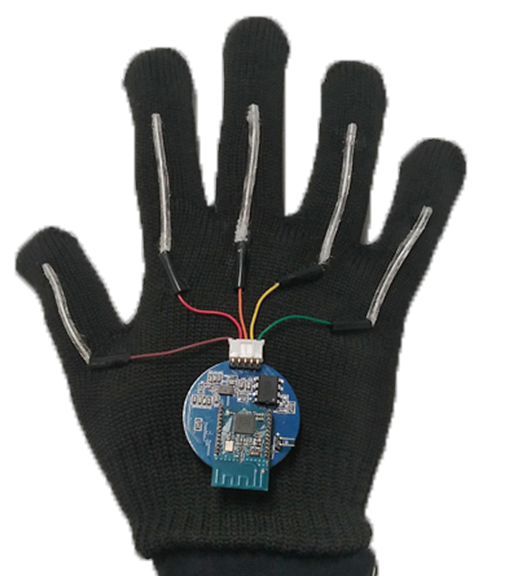

The system includes gloves with thin, stretchable sensors that run the length of each of the five fingers. Image Credit: Jun Chen Lab/UCLA.

The study was published in the journal Nature Electronics.

"Our hope is that this opens up an easy way for people who use sign language to communicate directly with non-signers without needing someone else to translate for them. In addition, we hope it can help more people learn sign language themselves."

Jun Chen, Assistant Professor, Bioengineering, Samueli School of Engineering, University of California, Los Angeles

Chen is also the principal investigator of the study.

The system consists of a pair of gloves fitted with thin, stretchable sensors that run the length of each finger. The sensors, made of electrically conducting yarns, pick up the hand motions and finger placements that denote individual letters, numbers, words, and phrases.

The sensors convert finger movements into electrical signals, which are sent to a dollar-coin-sized circuit board worn on the wrist. The board wirelessly transmits the signals to a smartphone, which translates them into spoken words at a rate of about one word per second.
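To make the signal path a little more concrete, here is a minimal Python sketch of one step a pipeline like this might include: reducing a short window of readings from the five finger sensors into a feature vector that a classifier could consume. The function name `extract_features`, the window format (a list of samples, each holding one reading per finger), and the choice of features (mean level and peak-to-peak swing per finger) are all illustrative assumptions; the article does not describe how the UCLA system actually processes its signals.

```python
from typing import List, Sequence

# One stretchable yarn sensor per finger, as described in the article.
NUM_FINGERS = 5


def extract_features(window: Sequence[Sequence[float]]) -> List[float]:
    """Reduce one gesture window to a flat feature vector (hypothetical scheme).

    `window` is a list of samples; each sample holds one reading per finger
    channel. For every channel we keep the mean level and the peak-to-peak
    swing, giving 2 * NUM_FINGERS features. These features are an assumption
    for illustration, not the ones used in the study.
    """
    if not window:
        raise ValueError("empty window")
    features: List[float] = []
    for ch in range(NUM_FINGERS):
        trace = [sample[ch] for sample in window]
        features.append(sum(trace) / len(trace))  # average bend level of this finger
        features.append(max(trace) - min(trace))  # how far this finger moved in the window
    return features


if __name__ == "__main__":
    # Toy window: three samples in which only some fingers bend.
    window = [
        [0.1, 0.0, 0.2, 0.0, 0.0],
        [0.3, 0.0, 0.4, 0.1, 0.0],
        [0.5, 0.0, 0.6, 0.2, 0.0],
    ]
    print(extract_features(window))
```

Keeping per-finger summaries rather than raw samples gives every gesture window a fixed-length vector, which is what most simple classifiers expect regardless of how long the gesture took.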

The researchers also attached adhesive sensors to the testers’ faces, on one side of the mouth and between the eyebrows, to pick up the facial expressions that are part of American Sign Language.

According to Chen, earlier wearable systems for translating American Sign Language were limited by heavy, bulky designs or were uncomfortable to wear.

The UCLA device is made of lightweight, inexpensive, yet durable and stretchable polymers, and its electronic sensors are also flexible and very cheap.

The team tested the device with four people who are deaf and use American Sign Language. The wearers repeated each hand gesture 15 times, and a custom machine-learning algorithm converted the gestures into the words, numbers, and letters they represented. The system recognized 660 signs, including every letter of the alphabet and the numbers 0 to 9.
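The article says only that a custom machine-learning algorithm mapped gestures to signs. As a hedged illustration of how repeated recordings of each gesture can be put to use, the sketch below swaps in a simple nearest-centroid classifier: the repetitions of each sign are averaged into a template, and a new reading is labeled with the closest template. The function names `train_centroids` and `classify`, the labels, and the toy two-feature vectors are hypothetical and do not reflect the study's actual algorithm or data.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def train_centroids(examples: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Average the repeated recordings of each sign into one template (centroid).

    Nearest-centroid is a stand-in chosen for illustration; it is not the
    study's custom algorithm.
    """
    centroids: Dict[str, List[float]] = {}
    for label, reps in examples.items():
        dim = len(reps[0])
        centroids[label] = [sum(r[i] for r in reps) / len(reps) for i in range(dim)]
    return centroids


def classify(sample: Vector, centroids: Dict[str, List[float]]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(sample, centroids[label]))


if __name__ == "__main__":
    # Hypothetical training data: 3 repetitions per sign (the study used 15).
    examples = {
        "A": [[1.0, 0.0], [0.9, 0.1], [1.1, 0.0]],
        "B": [[0.0, 1.0], [0.1, 0.9], [0.0, 1.1]],
    }
    centroids = train_centroids(examples)
    print(classify([0.8, 0.2], centroids))
```

Averaging many repetitions smooths out differences in how a wearer performs the same sign each time, which is one reason repeated recordings help even a very simple classifier.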

In addition to Chen, the UCLA authors of the study are co-lead author Zhihao Zhou, Kyle Chen, Songlin Zhang, Yihao Zhou, and Weili Deng, all members of Chen’s Wearable Bioelectronics Research Group at UCLA. Jin Yang of Chongqing University in China is the study’s other corresponding author.

UCLA has filed a patent on the technology. According to Chen, a commercial model based on it would need a larger vocabulary and even faster translation.

Journal Reference:

Zhou, Z., et al. (2020) Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays. Nature Electronics. doi.org/10.1038/s41928-020-0428-6.

Video Credit: UCLA.